Composite AI is a multidisciplinary approach that combines multiple types of artificial intelligence (unsupervised learning, predictive models, causal reasoning, and generative AI) to create more reliable, resilient, and trustworthy AI systems. Unlike single-model approaches, composite AI provides structure, explainability, and grounded decision-making.

This post explains what composite AI is, how it works, and why it is critical for enterprise AI reliability.

Who Watches the Watchmen?

Generative AI has transformed how we build, deploy, and interact with intelligent systems. It’s also exposed an urgent fragility: most teams can’t adequately see how these systems behave once they’re deployed in the wild. AI observability emerged to close that gap.

But there’s still a massive problem: most of these tools use gen AI to watch over your gen AI apps. Who’s watching the watchmen? Debugging LLM-driven agents with another LLM doesn’t solve the problem; it multiplies the uncertainty. It’s turtles all the way down!

Generative AI alone can’t guarantee reliability. Reliability requires a multidisciplinary approach combining causal, predictive, and generative intelligence to create the fast feedback loops modern AI stacks need to stay accurate, safe, and self-improving.

What Is Composite AI?

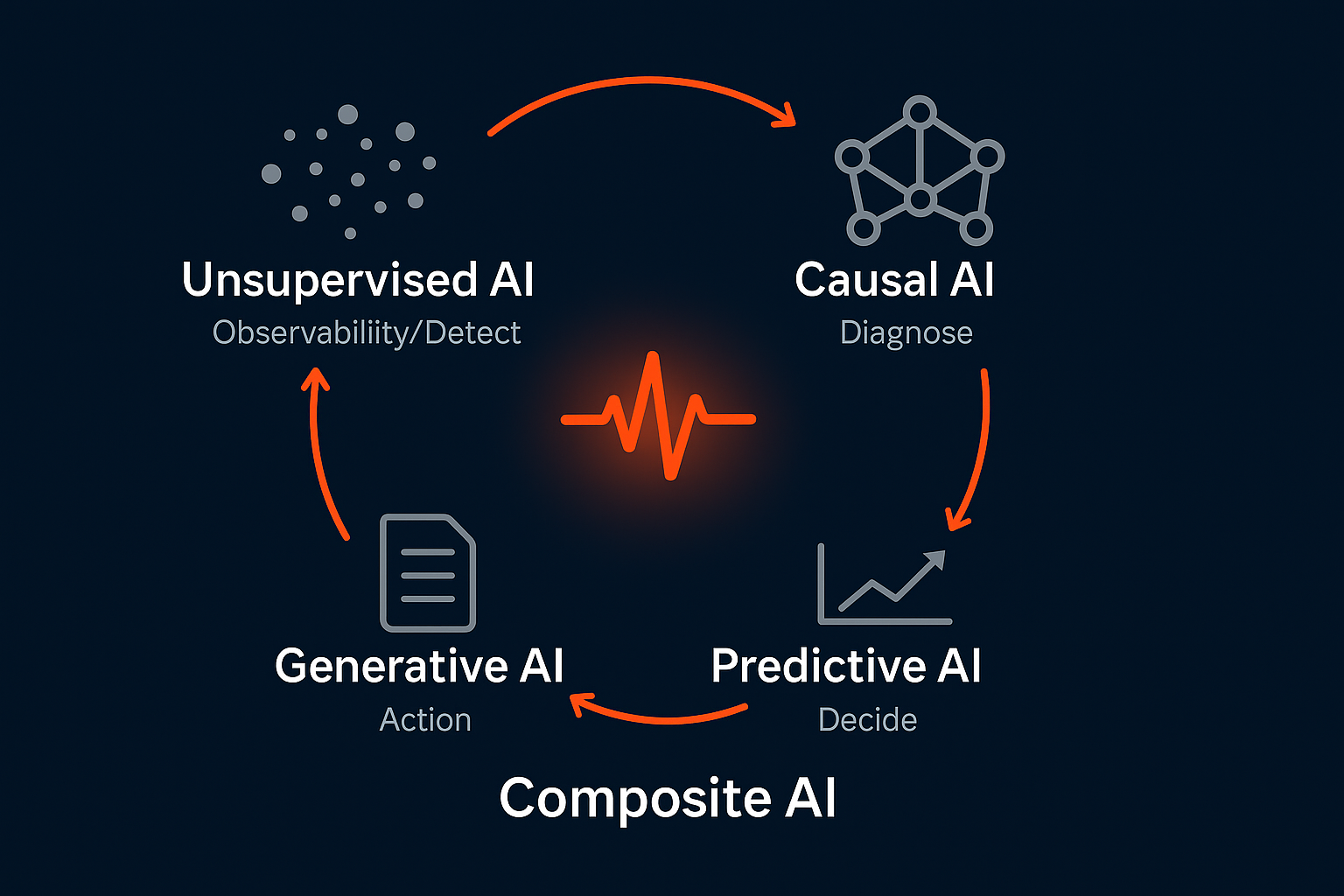

Composite AI integrates different AI techniques—unsupervised machine learning, predictive analytics, causal reasoning, and generative AI—to create a holistic intelligence layer that can observe, diagnose, decide, and improve continuously.

Instead of relying on a single model or algorithm, composite AI applies the right method to each problem, so it can solve a wider range of business problems more effectively.

- Unsupervised AI detects unknown anomalies across multiple types of data and systems, without labeled data or predefined thresholds.

- Predictive AI anticipates likely failures and drift, allowing you to fix them proactively.

- Causal AI identifies the true sources of degradation or error, so that you don’t just know a problem exists; you also know exactly where it is and why it happened.

- Generative AI communicates findings, generates summaries, and even proposes fixes by interpreting accurate data from across multiple sources.

Together, these AI methods create an intelligent AI agent that doesn’t just trigger an alert or turn a dashboard red. It also explains what happened, why it happened, what to do next, and how to prevent it in the future.
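To make that division of labor concrete, here is a minimal Python sketch that chains the four layers, assuming one latency metric per service and a known dependency graph. The z-score detector, the linear-trend forecast, the "most upstream anomalous service" root-cause rule, the template summary standing in for an LLM, and all service names and numbers are illustrative assumptions, not InsightFinder’s methods.

```python
from statistics import mean, stdev


def detect_anomaly(history: list[float], latest: float, z_threshold: float = 3.0) -> bool:
    """Unsupervised layer: flag a value far from the baseline learned from
    unlabeled history (needs at least two historical points)."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return latest != mu
    return abs(latest - mu) / sigma > z_threshold


def forecast_breach(series: list[float], limit: float, horizon: int) -> bool:
    """Predictive layer: fit a least-squares line to the series and report
    whether it is projected to exceed `limit` within `horizon` more steps."""
    n = len(series)
    x_bar, y_bar = (n - 1) / 2, mean(series)
    cov = sum((x - x_bar) * (y - y_bar) for x, y in enumerate(series))
    var = sum((x - x_bar) ** 2 for x in range(n))
    slope = cov / var
    intercept = y_bar - slope * x_bar
    return intercept + slope * (n - 1 + horizon) > limit


def root_cause(anomalous: set[str], depends_on: dict[str, list[str]]) -> list[str]:
    """Causal layer (simplified): an anomalous service whose own dependencies
    are all healthy is the most upstream failure, so it is the candidate cause."""
    return sorted(
        s for s in anomalous
        if not any(d in anomalous for d in depends_on.get(s, []))
    )


def summarize(anomalous: set[str], causes: list[str]) -> str:
    """Generative layer stand-in: a fixed template. A real system might hand
    these grounded facts to an LLM rather than letting it guess."""
    return (f"{len(anomalous)} anomalous service(s); "
            f"likely root cause: {', '.join(causes) or 'unknown'}.")


if __name__ == "__main__":
    # Hypothetical latency history (ms) per service and a hypothetical dependency map.
    history = {
        "db": [10, 11, 10, 12, 11, 10, 11],
        "api": [20, 21, 20, 22, 21, 20, 21],
        "frontend": [30, 31, 30, 32, 31, 30, 31],
    }
    latest = {"db": 40, "api": 55, "frontend": 31}
    depends_on = {"frontend": ["api"], "api": ["db"], "db": []}

    anomalous = {s for s in history if detect_anomaly(history[s], latest[s])}
    print(summarize(anomalous, root_cause(anomalous, depends_on)))
    # Hypothetical disk-usage trend (%): projected to pass 90% within 5 steps?
    print(forecast_breach([50, 55, 60, 65, 70], limit=90, horizon=5))
```

Running it prints `2 anomalous service(s); likely root cause: db.` and then `True`. Both `db` and `api` spike, but only `db` has no anomalous dependency, so the causal layer points there rather than at the loudest symptom. Each layer is deliberately simple; a production system would use stronger models behind the same small interfaces.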

Why GenAI Alone Can’t Ensure Reliability

Generative AI excels at summarizing information and generating natural language, but it was never designed for anomaly detection or predictive analytics. When used as the sole “brain” for observability or evaluation, it introduces three major risks:

1. Probabilistic Inference Errors

LLMs are probabilistic models with opaque decision paths. Wrapping them around enterprise monitoring pipelines means trusting one model’s output as the truth. When that model misinterprets a signal, the entire diagnostic chain fails.

2. Lack of Grounded Causality

Generative AI infers likely answers based on linguistic probability, not empirical data. It can summarize log data or metrics but struggles to establish causal relationships across distributed systems, pipelines, and model components. It’s a very effective tool, but on its own it isn’t the best approach to causal inference.

3. Reactive, Not Preventive Behavior

Most LLM-driven observability tools react to issues after they occur. They describe symptoms in natural language but rarely offer reliable prevention or retraining mechanisms.

Relying solely on generative AI to check the quality of your generative AI stack is circular logic. It’s like asking a mirror for diagnosis instead of using a microscope for analysis.

Composite AI as a Multidisciplinary Advantage

Composite AI breaks that monoculture. It embodies a multidisciplinary approach where no single technique dominates. Each AI discipline contributes its unique strength:

- Unsupervised AI models quantify and detect statistical deviation.

- Predictive AI models forecast drift or degradation before incidents occur.

- Causal AI models explain dependencies and identify true root causes.

- Generative AI makes insights user-friendly by summarizing and translating results into human context.

This structure builds diversity and resilience, two critical qualities in any reliability system. When one layer fails or produces uncertain data, another layer provides verification or context. It becomes a system of checks and balances.
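One way to picture these checks and balances is to let a non-generative layer audit the generative one. The sketch below rejects an LLM-written explanation that blames a service the detection layer never flagged. The keyword-matching check, the function name `ungrounded_claims`, the hard-coded explanation string, and the service names are all hypothetical choices made for illustration, not a described product feature.

```python
import re


def ungrounded_claims(explanation: str, services: set[str], anomalous: set[str]) -> set[str]:
    """Return the services an explanation mentions that the detection layer
    did NOT flag, i.e. claims with no supporting evidence."""
    words = set(re.findall(r"[a-z0-9_-]+", explanation.lower()))
    mentioned = {s for s in services if s.lower() in words}
    return mentioned - anomalous


if __name__ == "__main__":
    # Hypothetical LLM output; in practice this would come from a model call.
    explanation = "Latency spike caused by the cache layer, which slowed the api."
    services = {"db", "api", "cache", "frontend"}
    anomalous = {"db", "api"}  # flagged by the unsupervised layer
    flagged = ungrounded_claims(explanation, services, anomalous)
    if flagged:
        print(f"Rejecting explanation; unsupported claims about: {sorted(flagged)}")
```

Here the explanation blames `cache`, which shows no anomaly, so it is rejected. A real verifier would likely compare structured outputs rather than keywords, but the principle is the same: the generative layer’s claims must be backed by evidence from another layer.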

Composite AI also delivers what pure generative AI can’t: fast, verifiable feedback loops. These loops connect runtime data with evaluation, diagnosis, and fine-tuning, allowing systems to learn from production behavior safely and automatically.

From Observability to AI Reliability

At InsightFinder, we’ve built an AI Reliability Platform around these principles. Whether you’re building apps on LLMs, deploying agentic workflows, or tuning small domain-specific models, we have you covered.

Composite AI is not a feature; it’s the foundation of our approach to building trust.

Our platform unifies observability, diagnosis, prediction, and governance to turn raw telemetry into actionable reliability intelligence. It’s designed for enterprises building AI systems that can not only act autonomously but also do so safely, transparently, and continuously within policy guardrails.

Here’s how our composite approach, built on patented methods, works:

- Observability as a foundation – Unsupervised ML continuously learns the baseline behavior of applications, infrastructure, and AI models, detecting anomalies and drift in real time.

- Accurate and trainable diagnosis – Causal inference maps system dependencies, identifying the true source of anomalies rather than just alerting on symptoms.

- Proactive fixes – Predictive analytics anticipates emerging reliability risks so we can alert you before they impact your service.

- Clear actions – Generative AI translates complex findings into clear, actionable recommendations that help teams act faster with accuracy and confidence.

Each layer feeds a feedback loop that speeds up improvements across your software development lifecycle: detect → diagnose → evaluate → retrain. Over time, that workflow extends beyond observability and evals into fast, continuous improvement that lets you deliver AI reliability.
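A minimal sketch of such a loop, assuming a single metric, a z-score baseline, and operator feedback as the evaluation signal. `BaselineDetector`, `run_cycle`, and the 0.5 precision cutoff are hypothetical, and the diagnose step is left out to keep the example short.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class BaselineDetector:
    baseline: list[float]
    z_threshold: float = 3.0

    def detect(self, value: float) -> bool:
        """Flag values far from the learned baseline (z-score test)."""
        mu, sigma = mean(self.baseline), stdev(self.baseline)
        return sigma > 0 and abs(value - mu) / sigma > self.z_threshold

    def retrain(self, normal_values: list[float]) -> None:
        """Refit the baseline on recent values that operators confirmed as normal."""
        if len(normal_values) >= 2:  # stdev needs at least two points
            self.baseline = list(normal_values)


def run_cycle(detector: BaselineDetector, window: list[float],
              confirmed_incidents: set[int], min_precision: float = 0.5):
    """One detect -> evaluate -> retrain pass (diagnosis omitted for brevity)."""
    # Detect: indices in the window that the detector flags.
    alerts = [i for i, v in enumerate(window) if detector.detect(v)]
    # Evaluate: precision of alerts against operator feedback.
    hits = sum(1 for i in alerts if i in confirmed_incidents)
    precision = hits / len(alerts) if alerts else 1.0
    # Retrain: if most alerts were false positives, the baseline has drifted.
    if precision < min_precision:
        detector.retrain([v for i, v in enumerate(window) if i not in confirmed_incidents])
    return alerts, precision


if __name__ == "__main__":
    detector = BaselineDetector([10, 11, 10, 12, 11, 10, 11])
    # A deploy shifts normal latency to ~20 ms; operators confirm no real incident.
    print(run_cycle(detector, [20, 21, 20, 22, 21], confirmed_incidents=set()))
    # After retraining, only the genuine spike at index 2 is flagged.
    print(run_cycle(detector, [21, 20, 60], confirmed_incidents={2}))
```

The first call returns `([0, 1, 2, 3, 4], 0.0)`: every point is a false alarm, so the baseline is refit. The second returns `([2], 1.0)`. Refitting only on values confirmed as normal is one simple way to keep retraining from learning real incidents as the new normal.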

Sovereignty and Safety by Design

One of the most overlooked dimensions of reliability is sovereignty: the ability to operate without dependency on public LLMs or third-party data pipelines.

Composite AI enables sovereignty by decoupling intelligence layers from any single commercial foundation model. InsightFinder’s architecture is sovereign by design, supporting air-gapped, on-premises deployment for regulated or sensitive environments (e.g., finance, healthcare, and defense).

This independence eliminates data exposure risks, mitigates cost volatility, and prevents vendor lock-in. It also removes external LLMs as single points of failure, reinforcing trust and continuity.

In other words: reliability isn’t just about uptime. It’s about control.

Why Now: The Shift from Chat to Action

The market shift is already underway. AI systems are moving beyond chat interfaces toward agentic AI: systems that act on behalf of users, trigger workflows, and make operational decisions. As these agentic systems gain more autonomy, the demand for reliability, safety, and governance rises sharply.

To guide those systems, small language models (SLMs) and domain-specific models are becoming the enterprise standard. They require rapid iteration, fine-grained control, and continuous learning from production data, all capabilities native to InsightFinder’s composite AI foundation.

Observability alone can’t meet these requirements. Neither can standalone tools like evals or gateways. Teams need complete platforms that can detect, diagnose, evaluate, and improve continuously, and composite AI is the architecture that makes such platforms viable for delivering reliability.

That reliability depends on three principles:

- Multidisciplinary Intelligence: combining unsupervised, predictive, causal, and generative AI in one cohesive system.

- Fast-Feedback Improvement: continuously learning from live outcomes to fine-tune models and policies.

- Sovereign Control: maintaining operational independence, privacy, and predictability in every deployment.

This is how InsightFinder turns observability into actionable reliability—and how we help enterprises build AI agents they can trust with customers.

Deliver AI You Can Trust

Point solutions focused on observability, eval systems, policies, or guardrails are a good start toward reliability. Holistic platforms that enable end-to-end workflows are more effective. A key component within those holistic workflows is composite AI.

Multidisciplinary techniques are the foundation for reliable and resilient AI systems. By unifying detection, diagnosis, prediction, and improvement under one framework that applies the approach best suited to each task, every signal becomes an opportunity for safer, smarter action.

Generative AI may have kick-started the AI revolution, but composite AI is what will sustain it by making autonomous systems trustworthy, verifiable, and self-improving.

Want to learn more?

Sign up for a free trial or request a demo session with our team.