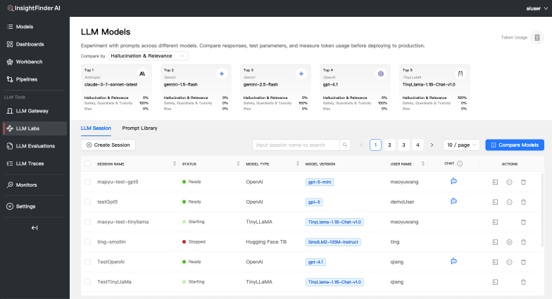

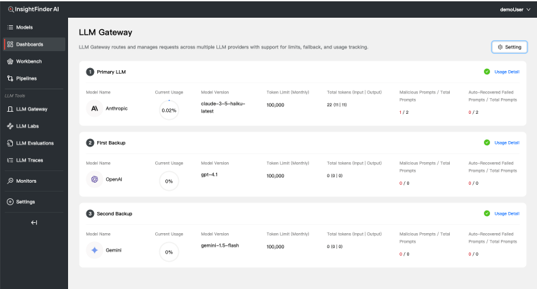

Complete lifecycle management for teams building and running LLM and ML models. InsightFinder provides an end-to-end AI Observability Platform that helps teams build, deploy, govern, and maintain trustworthy AI systems in production while controlling cost and improving reliability.

Data scientists, data engineers, ML engineers, AI platform engineers, and Chief AI Officers use InsightFinder to deliver high-performing, stable, and predictable LLM and ML applications at scale.