InsightFinder AI & Observability Blog

The AI Reliability Problem: How to Detect and Prevent System Failures Early

AI systems fail more often than engineering teams expect, and they often fail without…

Operational AI in Telecom: Helen Gu on Building Predictive, Reliable Networks

At the SCTE Connect Panel on AI & Connectivity, held…

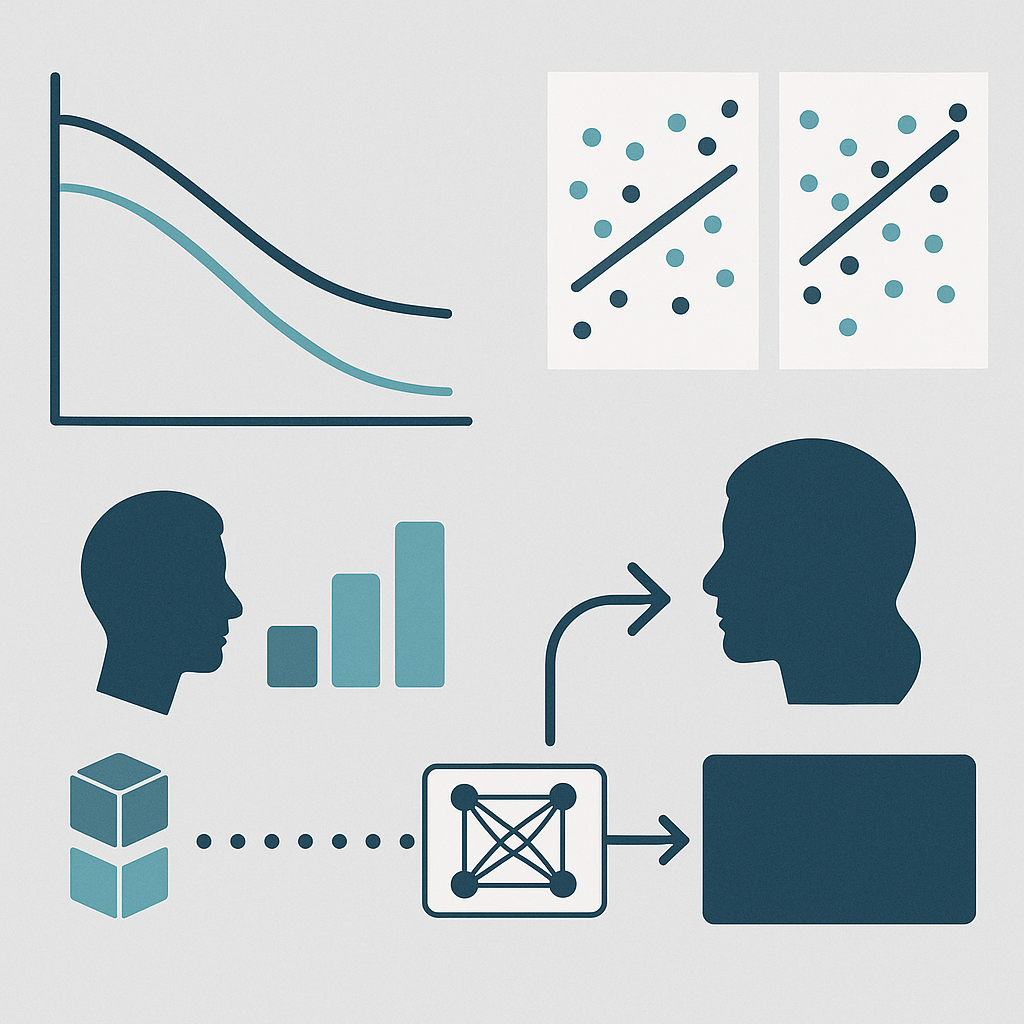

Understanding Model Drift: Types, Causes, and How to Detect it Before Accuracy Drops

AI models rarely maintain peak accuracy indefinitely. Whether deploying classic machine-learning models or state-of-the-art…

Building a Model Monitoring Framework for Reliable AI Systems

AI systems rarely fail in a dramatic, single event. In most production environments, reliability…

Why Predictive Analytics Is Critical for Cloud Infrastructure Monitoring

Modern cloud infrastructure is a complex, rapidly changing ecosystem utilizing microservices, containers, distributed storage,…

A Practitioner’s Guide to AIOps, MLOps, and LLMOps

You’re likely here because you’re trying to figure out how to deploy, monitor, and…

Proactive Reliability: How Predictive Observability Reduces Outages Through Early Detection

Most organizations still learn about system issues only after performance declines or customers begin…

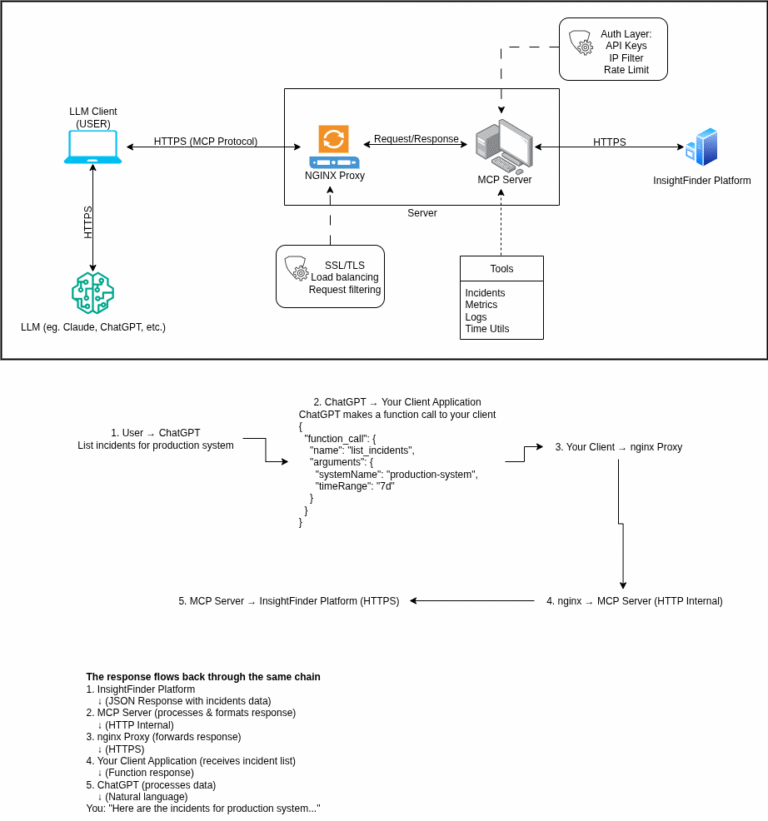

How to Harden Your MCP Server

Model Context Protocol, or MCP, servers have seemingly become the new API server, with…

AI Observability Tools 2025: Platform Comparison Guide for ML and LLM Reliability

Imagine this: your chatbot’s performance has been declining for weeks, producing generic responses due…

Key Metrics for Measuring AI Observability Performance

As AI-driven systems, LLM workloads, and distributed architectures expand in scale and complexity, the…

5 Common Observability Pitfalls and How Predictive Analytics Solves Them

Many engineering teams have invested heavily in observability platforms, yet the same operational problems…

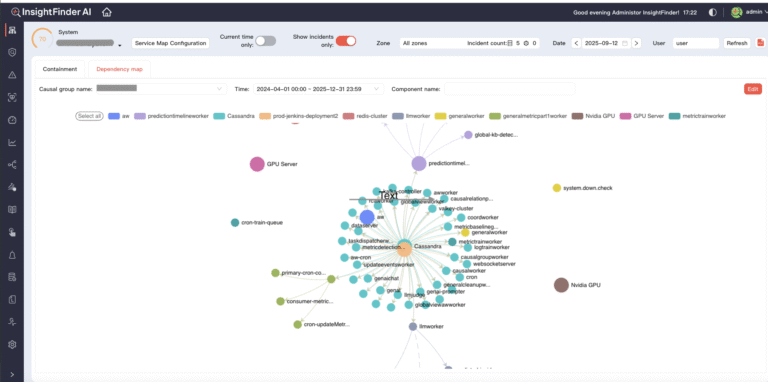

Announcing InsightFinder’s Dependency Graph: A New Way to Ensure Service Reliability

Modern applications are built on hundreds of interconnected services. While this architecture drives speed…

Introducing InsightFinder’s LLM Gateway: A Unified Layer for Reliable, Secure, and Observable AI

LLM adoption has moved faster than the infrastructure supporting it. Teams are rolling out…

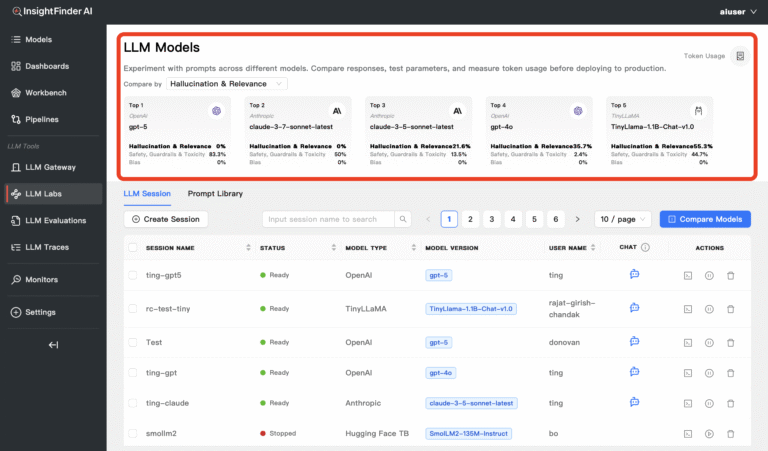

LLM Labs: Faster Evaluations for Large Language Models

Choosing the right large language model (LLM) for your application has never been more…

InsightFinder MCP Server: A New Gateway Between AI and Observability

Today, we’re announcing the general availability of InsightFinder’s new MCP (Model Context Protocol) server….
