InsightFinder AI & Observability Blog

OTel me more about using InsightFinder

Teams often presume that trying a new AI observability tool means re-instrumenting code, swapping…

Read more

Introducing ARI: InsightFinder’s New Operational Reliability AI Agent

Production reliability is hitting a breaking point. As systems become more distributed and deployments…

Read more

InsightFinder’s Patent for Automated Incident Prevention is Granted

InsightFinder has been granted its automation patent, which completes its unique closed-loop reliability platform…

Read more

AI Agents: The New Path Forward and How Reliability Catches Up

AI applications are shifting from “answer engines” to “action engines.” The moment an AI…

Read more

How to Monitor AI Agents: Reliability Challenges and Observability Best Practices

AI agents represent a shift in how language models are used in production. Unlike…

Read more

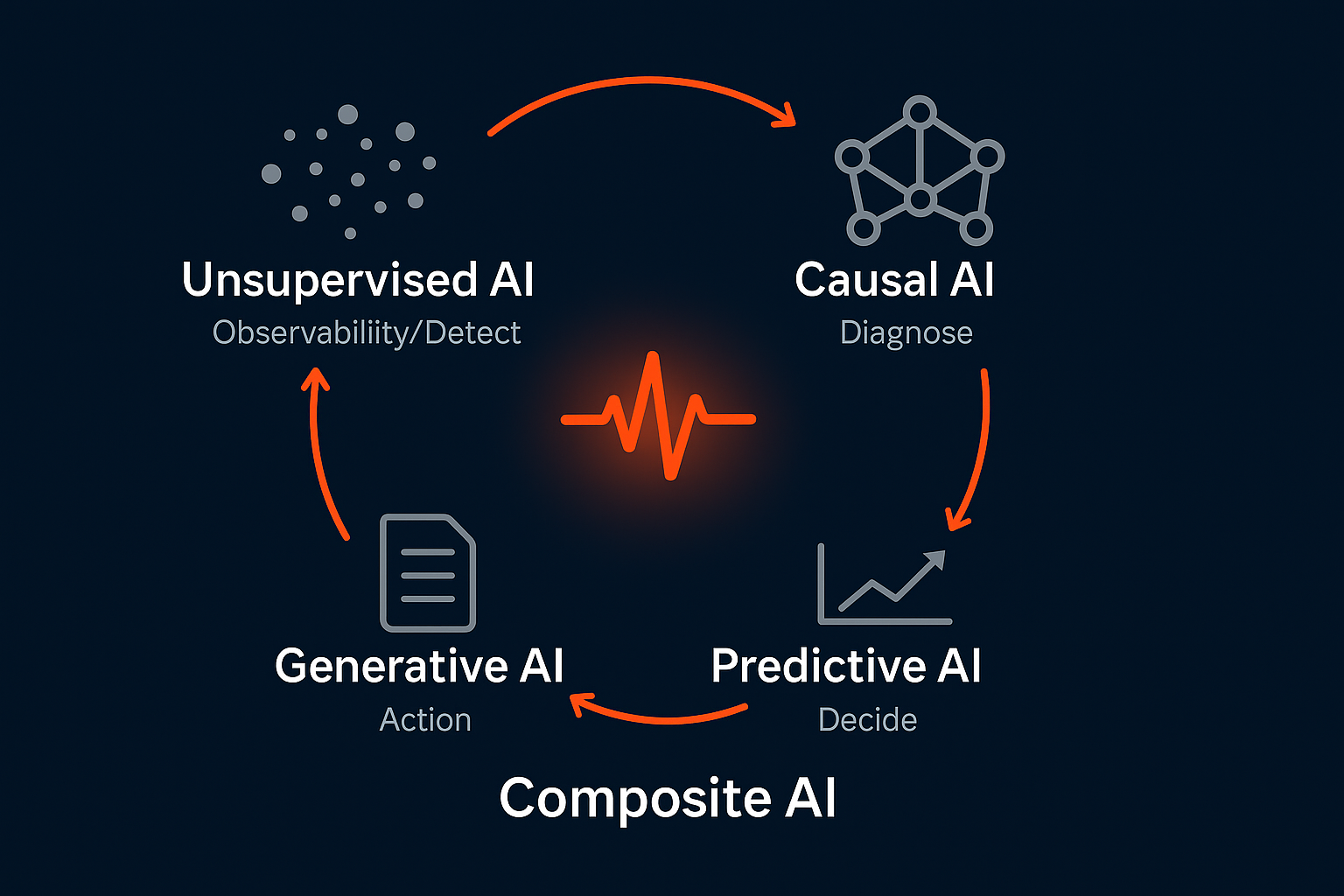

Composite AI for IT Observability: Why Generative AI Alone Is Not Enough

Composite AI refers to the use of multiple AI techniques, such as unsupervised learning,…

Read more

What Is Composite AI? A Practical Guide to Reliable and Trustworthy AI Systems

InsightFinder’s Composite AI blends unsupervised ML, predictive drift modeling, causal dependency mapping, and GenAI summaries into one cohesive reliability engine.

Read more

Debugging Faster with Distributed Traces in InsightFinder AI Observability Platform

With AI applications, any single request can fan out into session state checks, prompt…

Read more

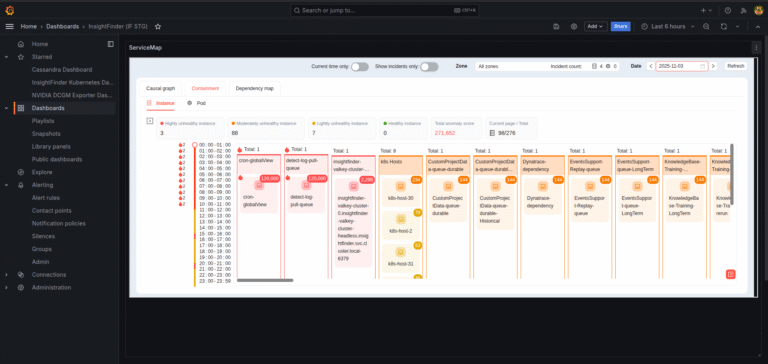

Insights for Grafana: From Correlation to Causation with InsightFinder AI

Grafana, the open platform engineers trust to visualize metrics, logs, and traces across distributed…

Read more

Infrastructure Signals Every AI Team Should Monitor to Prevent Outages

AI outages rarely begin as dramatic failures. They tend to emerge quietly, shaped by…

Read more

Hallucination Root Cause Analysis: How to Diagnose and Prevent LLM Failure Modes

The prevalent view treats LLM hallucinations as unpredictable, sudden failures—a reliable system unexpectedly generating…

Read more

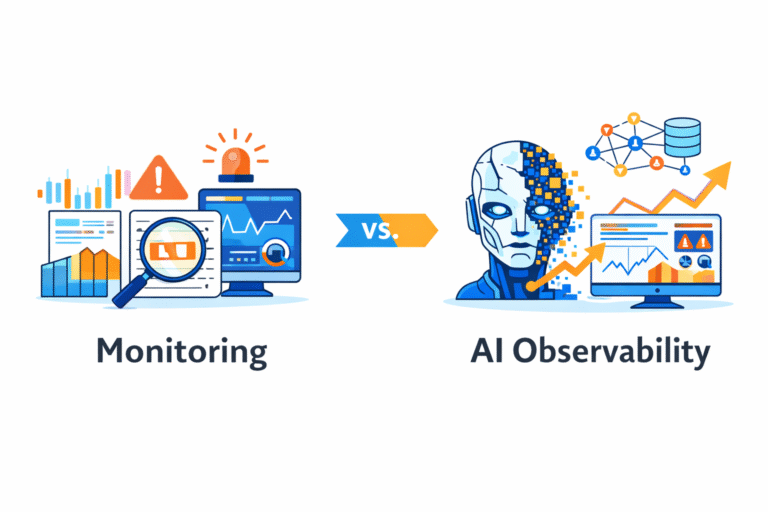

AI Observability vs Monitoring: Key Differences and When Each Approach Matters

Many engineering teams still use the terms “monitoring” and “observability” interchangeably. At first glance,…

Read more

Generative AI Observability: Ensuring Accuracy and Reducing Hallucinations

Generative AI has reached the point where powerful models are widely available, yet reliability…

Read more

Why Do LLMs Hallucinate? How Observability Tools Can Help Detect It

Large language models have moved quickly from experimentation to production. They now sit behind…

Read more

The Hidden Cost of LLM Drift: How to Detect Subtle Shifts Before Quality Drops

Large language model drift rarely announces itself. In most production systems, the model continues…

Read more