AI models rarely maintain peak accuracy indefinitely. Whether you deploy classic machine-learning models or state-of-the-art large language models (LLMs), performance naturally erodes as the environment around them changes. Data evolves, user behavior shifts, upstream systems fluctuate, and hidden patterns gradually diverge from the assumptions embedded in the model during training.

This phenomenon, known as model drift, represents one of the most persistent and costly challenges in real-world AI systems. Drift can manifest as subtle accuracy degradation, unreliable predictions, flawed automation, and downstream errors that disrupt user experiences and business operations.

Understanding the types of drift, the factors that trigger it, and the signals indicating early degradation is essential for sustaining model reliability. This article examines the key forms of model drift, why they occur, and strategies for detecting them before performance declines and user trust erodes.

What Is Model Drift?

How Models Change Over Time

Models are not static. Even in the absence of explicit errors, predictions may slowly become less reliable as the underlying patterns in data shift. This is because a model’s assumptions are based on historical data distributions that may no longer reflect current reality. Over time, these subtle shifts accumulate, introducing discrepancies between expected and actual outcomes.

Why Drift Happens Even Without Data Errors

Drift does not require corrupted or missing data. Models can degrade simply due to the natural evolution of behavior, context, or system conditions. For instance, seasonal trends, emerging user preferences, or updated business rules can gradually invalidate previously accurate patterns, leading to silent performance decay.

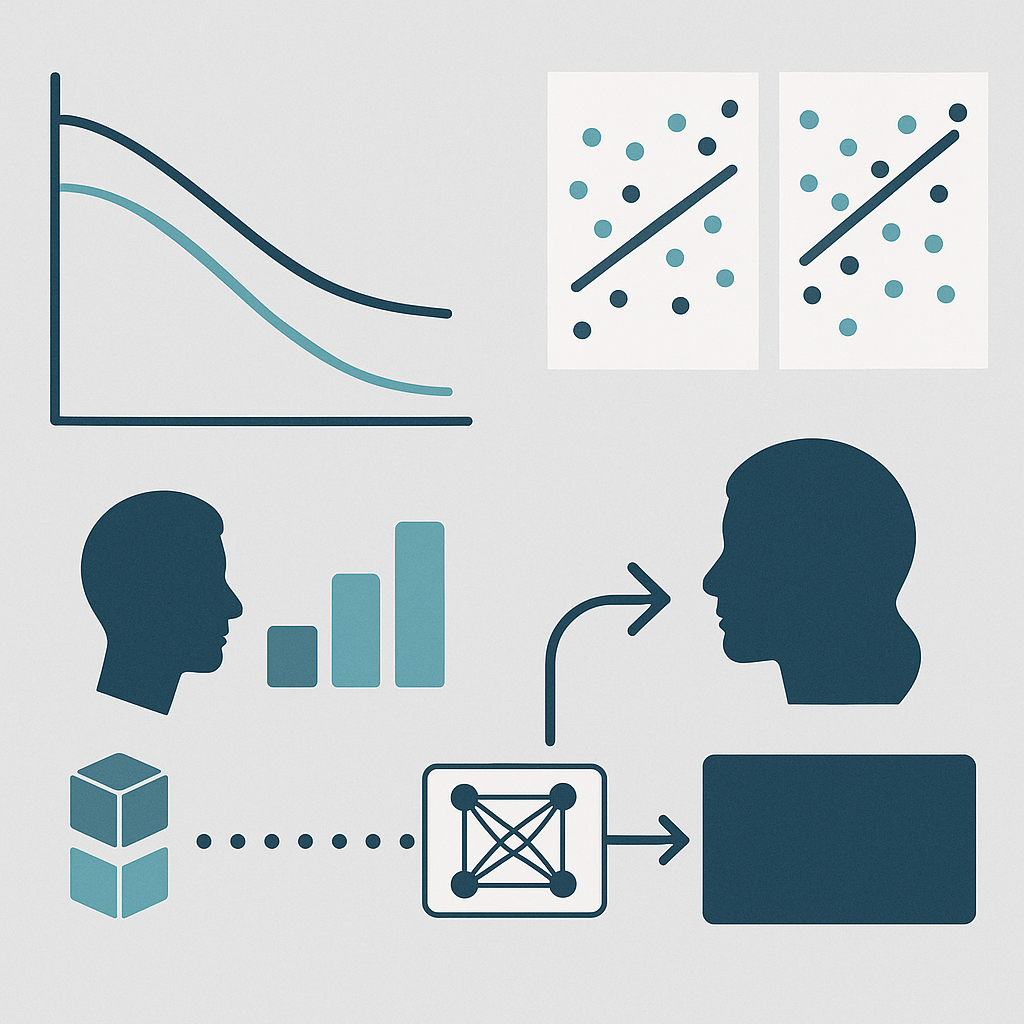

The Major Types of Model Drift

Data Drift (Input Distribution Shifts)

Data drift occurs when the statistical properties of incoming inputs differ from the training data. A retail demand forecasting model, for example, may see changed purchase patterns after a new competitor enters the market. While the model continues producing outputs, the predictions become less representative of the current state.

Concept Drift (Labels/Relationships Change)

Concept drift arises when the relationship between inputs and the target variable changes. Credit scoring models may experience this when economic conditions change how much default risk a given applicant profile carries, so the same inputs now map to a different outcome. Concept drift is particularly challenging because it undermines the fundamental assumptions of the model, often requiring retraining or adaptive learning approaches.
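One common way to make the distinction between data drift and concept drift precise is to compare the training-time and live distributions. This is offered as a sketch, and the symbols X (inputs), Y (target), and the subscripts "train" and "live" are notation introduced here for illustration.

```latex
\begin{aligned}
\text{Data drift:}\quad & P_{\text{train}}(X) \neq P_{\text{live}}(X), \quad P_{\text{train}}(Y \mid X) = P_{\text{live}}(Y \mid X) \\
\text{Concept drift:}\quad & P_{\text{train}}(Y \mid X) \neq P_{\text{live}}(Y \mid X)
\end{aligned}
```

In practice the two often occur together. The formulation mainly shows why monitoring inputs alone cannot reveal a change in P(Y | X): detecting that requires labels or some proxy for real outcomes.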

Prediction Drift (Output Quality Degrades)

Prediction drift refers to observable degradation in the quality of model outputs, even when input distributions remain stable. This often signals underlying changes in feature relevance or latent relationships, manifesting as lower accuracy, higher error rates, or inconsistent outputs across similar cases.

Model Behavior Drift in LLMs

A subtle and often overlooked form of drift occurs in large language models. Over time, LLM outputs can gradually deviate in style, factual accuracy, or alignment with user expectations, a phenomenon sometimes called behavioral drift. This drift is influenced not only by changes in input distribution but also by evolving prompt usage, system or model-version updates, and, where a deployment is periodically fine-tuned on production data, cumulative exposure to real-world interactions.

Common Causes of Model Drift

Evolving User Behavior

Shifts in how users interact with systems are a primary driver of drift. Even minor behavioral changes can accumulate, causing models to misinterpret new patterns or undervalue emerging features.

Environmental or Seasonal Changes

Seasonal cycles, market fluctuations, and environmental factors can temporarily or permanently alter input patterns. Predictive models must adapt to these cyclical variations to maintain accuracy.

Dependency Shifts (APIs, Upstream Systems)

Many models rely on external data sources, APIs, or integrated systems. Updates, schema changes, or data inconsistencies in these dependencies can trigger drift without any changes in the core model itself.

Model Aging and Stale Parameters

Static model parameters become increasingly misaligned with current conditions over time. Without periodic retraining or fine-tuning, even well-performing models will exhibit performance decay.

Prompt Drift and LLM Degradation

In LLM deployments, variations in prompts and contextual framing can slowly erode output quality. Slight shifts in phrasing, context, or usage patterns introduce behavior changes that are invisible without careful monitoring.

Signs Your Model Is Experiencing Drift

Gradual Accuracy Decay

One of the earliest indicators of drift is a slow decline in predictive accuracy. Even modest drops should be treated as a warning sign, as they can presage larger failures.
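A slow decline is easier to spot when accuracy is tracked over a sliding window and compared against a fixed baseline. The following is a minimal sketch, assuming ground-truth labels eventually arrive for each prediction. The class name `RollingAccuracy` and the default `window`, `baseline`, and `tolerance` values are illustrative assumptions, not recommended settings.

```python
from collections import deque


class RollingAccuracy:
    """Tracks accuracy over the most recent `window` labeled predictions."""

    def __init__(self, window=500, baseline=0.90, tolerance=0.03):
        self.outcomes = deque(maxlen=window)  # True where prediction matched label
        self.baseline = baseline              # accuracy measured at deployment time
        self.tolerance = tolerance            # allowed drop before flagging

    def update(self, prediction, label):
        self.outcomes.append(prediction == label)

    @property
    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def degraded(self):
        # Only judge once the window is full, to avoid noisy early estimates.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.accuracy < self.baseline - self.tolerance
```

Calling `update()` as labels arrive and checking `degraded()` on a schedule turns a gradual decline into an explicit signal. A suitable window size depends on traffic volume and how quickly labels become available.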

Spikes in Anomaly Rates

Sudden increases in error rates, misclassifications, or unexpected outputs often signal that the model’s assumptions no longer match reality.

Changing Model Behavior Patterns

Monitoring model responses over time may reveal shifts in behavior, such as altered ranking orders, inconsistent recommendations, or style deviations in LLM outputs.

Misalignment Between Predicted vs. Expected Outputs

When predictions consistently diverge from expected outcomes, especially in edge cases or newly emerging scenarios, it often indicates underlying drift.

How to Detect Drift Before Performance Drops

Statistical Methods (KS Tests, PSI, Chi-Square)

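Classical statistical tools such as the Kolmogorov-Smirnov (KS) test, the Population Stability Index (PSI), and the chi-square test quantify shifts in the distributions of input features or model outputs, enabling early detection of drift. KS tests suit continuous features, chi-square tests suit categorical ones, and PSI works on binned values of either kind.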
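To make two of these measures concrete, here is a minimal pure-Python sketch of PSI and the two-sample KS statistic for a single numeric feature. The function names, the quantile-based binning, and the `eps` smoothing constant are assumptions made for illustration. In production, a library routine such as `scipy.stats.ks_2samp`, which also returns a p-value, would typically be used instead.

```python
import math
from bisect import bisect_right


def psi(reference, current, n_bins=10, eps=1e-6):
    """Population Stability Index between two numeric samples.

    Bin edges come from quantiles of the reference sample, which is one
    common convention; equal-width bins are another.
    """
    ref_sorted = sorted(reference)
    # Interior cut points at the 1/n_bins, 2/n_bins, ... quantiles.
    edges = [ref_sorted[int(len(ref_sorted) * i / n_bins)] for i in range(1, n_bins)]

    def bin_fractions(sample):
        counts = [0] * n_bins
        for x in sample:
            counts[bisect_right(edges, x)] += 1
        # eps avoids log(0) and division by zero for empty bins.
        return [max(c / len(sample), eps) for c in counts]

    ref_frac = bin_fractions(reference)
    cur_frac = bin_fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_frac, cur_frac))


def ks_statistic(reference, current):
    """Two-sample KS statistic: the largest gap between the empirical CDFs."""
    ref_sorted, cur_sorted = sorted(reference), sorted(current)
    max_gap = 0.0
    # The gap can only change at observed values, so checking those suffices.
    for x in ref_sorted + cur_sorted:
        ref_cdf = bisect_right(ref_sorted, x) / len(ref_sorted)
        cur_cdf = bisect_right(cur_sorted, x) / len(cur_sorted)
        max_gap = max(max_gap, abs(ref_cdf - cur_cdf))
    return max_gap
```

A frequently cited rule of thumb treats PSI below 0.1 as stable, 0.1 to 0.25 as a moderate shift, and above 0.25 as a significant shift. These cutoffs are conventions rather than guarantees and should be tuned per feature.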

Real-Time Anomaly Detection

Integrating anomaly detection into prediction pipelines enables teams to detect deviations as they occur, rather than relying on periodic assessments that may miss subtle trends.
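One lightweight way to score values as they arrive is to keep an exponentially weighted running mean and variance of a monitored metric, such as prediction confidence or per-batch error rate, and flag observations that fall far outside it. This is a sketch of that general technique, not a description of any particular product. The class name `EwmaDetector` and the default `alpha`, `threshold`, and `warmup` values are illustrative assumptions.

```python
import math


class EwmaDetector:
    """Flags values that deviate strongly from an exponentially weighted baseline."""

    def __init__(self, alpha=0.1, threshold=3.0, warmup=30):
        self.alpha = alpha          # weight given to each new observation
        self.threshold = threshold  # number of standard deviations that counts as anomalous
        self.warmup = warmup        # observations to see before flagging anything
        self.mean = 0.0
        self.var = 0.0
        self.count = 0

    def observe(self, value):
        """Score `value` against the current baseline, then update the baseline."""
        self.count += 1
        if self.count == 1:
            self.mean = value
            return False
        deviation = value - self.mean
        std = math.sqrt(self.var)
        is_anomaly = (
            self.count > self.warmup
            and std > 0
            and abs(deviation) > self.threshold * std
        )
        # Incremental updates for the exponentially weighted mean and variance.
        self.mean += self.alpha * deviation
        self.var = (1 - self.alpha) * (self.var + self.alpha * deviation ** 2)
        return is_anomaly
```

Because the baseline itself adapts, this style of detector catches sudden spikes well but will gradually absorb slow drift. Pairing it with a comparison against a fixed reference window covers both cases.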

Monitoring Model Embeddings or Latent Space Behavior

For complex models, tracking changes in embeddings or latent space representations can reveal early signs of misalignment before outputs are affected.
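A simple way to track embedding movement is to compare the centroid of a reference batch of embeddings with the centroid of a recent batch. The sketch below uses cosine distance between centroids. The function names are hypothetical, the embeddings are assumed to be plain lists of floats of equal length, and the centroids are assumed to be non-zero vectors.

```python
import math


def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def cosine_distance(a, b):
    """1 - cosine similarity; assumes neither vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def embedding_shift(reference_embeddings, current_embeddings):
    """Cosine distance between the centroids of two embedding batches."""
    return cosine_distance(
        centroid(reference_embeddings),
        centroid(current_embeddings),
    )
```

Centroid distance is a coarse summary: it can miss changes in spread or the appearance of new clusters. It is best read as one inexpensive signal alongside per-dimension or distribution-level checks.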

Behavior Modeling Across Distributed Systems

In large-scale deployments, drift can propagate through interconnected systems. Observing model behavior holistically across distributed systems helps identify upstream causes of degradation.

Continuous Monitoring vs. Periodic Evaluation

Continuous monitoring provides a more timely view of drift trends than periodic checks. By maintaining real-time visibility into model performance, teams can respond to drift before it impacts users.

Best Practices for Preventing Severe Model Drift

Automating Drift Alerts

Automated alerting ensures that teams are notified immediately when drift indicators exceed defined thresholds, enabling faster response and mitigation.
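Threshold-based alerting can be as simple as a table of limits evaluated against the latest drift metrics. The following sketch assumes metrics arrive as a dictionary of name-to-value pairs where higher means more drift. The names `Threshold` and `evaluate_drift` and the metric keys are hypothetical, and delivery of the alert (pager, chat, ticket) is left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    metric: str      # key expected in the metrics dictionary
    warn: float      # value at or above which a warning is raised
    critical: float  # value at or above which a critical alert is raised


def evaluate_drift(metrics, thresholds):
    """Return (metric, severity, value) tuples for every breached threshold."""
    alerts = []
    for t in thresholds:
        value = metrics.get(t.metric)
        if value is None:
            continue  # metric not reported in this cycle
        if value >= t.critical:
            alerts.append((t.metric, "critical", value))
        elif value >= t.warn:
            alerts.append((t.metric, "warning", value))
    return alerts
```

Separating warning and critical levels lets teams review moderate shifts during working hours while reserving immediate escalation for severe ones.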

Retraining Strategies

Proactive retraining using recent data, incremental updates, or adaptive learning techniques helps models remain aligned with evolving patterns.

Monitoring Upstream Data Quality

Ensuring consistent data quality from all inputs and dependencies reduces the likelihood of drift caused by external changes, reinforcing model reliability.
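A basic upstream check validates each incoming batch against an expected schema before it reaches the model. This sketch assumes records are dictionaries. The field names in `EXPECTED_SCHEMA`, the function name `validate_batch`, and the 5% null-rate limit are all illustrative assumptions.

```python
# Hypothetical schema: field name -> expected Python type.
EXPECTED_SCHEMA = {"user_id": str, "amount": float, "country": str}


def validate_batch(records, schema, max_null_rate=0.05):
    """Return a list of human-readable issues found in a batch of dict records."""
    issues = []
    for field, expected_type in schema.items():
        values = [record.get(field) for record in records]
        nulls = sum(v is None for v in values)
        # Missing keys and explicit None values both count as nulls.
        if records and nulls / len(records) > max_null_rate:
            issues.append(
                f"{field}: null rate {nulls / len(records):.0%} exceeds limit {max_null_rate:.0%}"
            )
        if any(v is not None and not isinstance(v, expected_type) for v in values):
            issues.append(f"{field}: unexpected type")
    return issues
```

Running a check like this at the ingestion boundary helps separate drift caused by a changed upstream schema from drift caused by genuine changes in behavior.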

Early Drift Detection Protects Performance and User Trust

Model drift is an inevitable challenge for all AI systems, but early detection and continuous monitoring can prevent small deviations from cascading into serious performance failures. By understanding the types, causes, and signals of drift, organizations can implement proactive measures that preserve accuracy, reliability, and user trust. Modern AI observability platforms, such as InsightFinder, provide the tools necessary to detect drift in real time and maintain confidence in model outputs.