Welcome to InsightFinder AI Observability Docs!


Traceviewer

The LLM Traces Page is a powerful and intuitive tool designed to help you understand anomalous behavior in your LLM and ML models. Powered by InsightFinder’s advanced anomaly detection engine, the LLM Traces Page automatically identifies and surfaces unusual patterns, performance issues, and problematic behaviors in AI systems and models. Whether you’re a data scientist investigating model degradation, a developer troubleshooting unexpected AI agent responses, a DevOps engineer monitoring ML system health, or a team lead ensuring reliable AI service performance, the AW LLM Traces Page gives you focused visibility so you can troubleshoot and improve efficiently.

What are LLM Traces?

An LLM trace is a timeline of events that occur when a large language model processes a request or generates a response.

Every trace is made up of spans, which represent individual operations or steps within that process. For example, when a user submits a prompt, you might have spans for prompt parsing, model tokenization, retrieving context or embeddings, running the forward pass through the model, applying safety or relevance checks, and streaming the generated tokens back to the user. Each span includes details such as when it started, how long it took, and what computations or interactions occurred during that stage.
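The trace/span relationship described above can be sketched as a small data model. This is a minimal, hypothetical Python illustration: the `Span` and `Trace` classes and their fields are assumptions, not InsightFinder’s or OpenTelemetry’s actual schema (real OpenTelemetry spans also carry trace and span IDs, a parent span ID, attributes, and a status). It shows how a trace’s end-to-end duration follows from the start times and durations of its spans.

```python
from dataclasses import dataclass, field


@dataclass
class Span:
    """One operation inside a trace (hypothetical shape, for illustration)."""
    name: str
    start_ms: float     # when the operation started, in milliseconds
    duration_ms: float  # how long the operation took, in milliseconds


@dataclass
class Trace:
    """The timeline of one request, made up of its spans."""
    trace_id: str
    spans: list[Span] = field(default_factory=list)

    def duration_ms(self) -> float:
        # End-to-end duration: earliest span start to latest span end.
        if not self.spans:
            return 0.0
        start = min(s.start_ms for s in self.spans)
        end = max(s.start_ms + s.duration_ms for s in self.spans)
        return end - start

    def slowest_span(self) -> Span:
        # The span that contributed the most time to the request.
        return max(self.spans, key=lambda s: s.duration_ms)


trace = Trace("t-1", [
    Span("prompt_parsing", 0, 5),
    Span("retrieve_context", 5, 120),
    Span("forward_pass", 125, 800),
    Span("stream_tokens", 925, 300),
])
print(trace.duration_ms())        # 1225
print(trace.slowest_span().name)  # forward_pass
```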

LLM traces can capture many different types of activities. Model traces show the flow of input through tokenization, inference, and generation. System traces record lower-level operations like GPU kernel executions, memory transfers, or API calls to external tools. In multi-component or agentic LLM systems, traces can follow a request across different steps such as retrieval, reasoning, tool use, and response synthesis—providing end-to-end visibility into how the model and supporting infrastructure handled the request.

Who can use Traceviewer?

The AW LLM Traces Page can be used by any role and provides views and insights into application performance tailored to each use case. Some example use cases:

- Data Scientists use AW LLM Traces to quickly identify when their LLM and ML models are behaving unexpectedly. Rather than manually sifting through thousands of normal traces, they can focus on the anomalous ones that indicate model drift, performance degradation, or data quality issues. This targeted approach helps them validate experimental results, detect when models need retraining, and understand the root causes of unexpected model outputs.

- DevOps and Site Reliability Engineers use AW LLM Traces to proactively monitor AI system health by focusing on anomalous patterns rather than normal operations. The tool highlights unusual service interactions, performance spikes, and error patterns across distributed ML and LLM systems, making it easier to prevent incidents before they impact users and reduce downtime in case of incidents.

- System Administrators appreciate the AW LLM Traces Page’s ability to automatically flag resource utilization anomalies and unusual performance patterns. When users report issues, administrators can immediately see whether there are corresponding anomalous traces related to infrastructure problems, model inference delays, or system bottlenecks.

Trace Ingestion

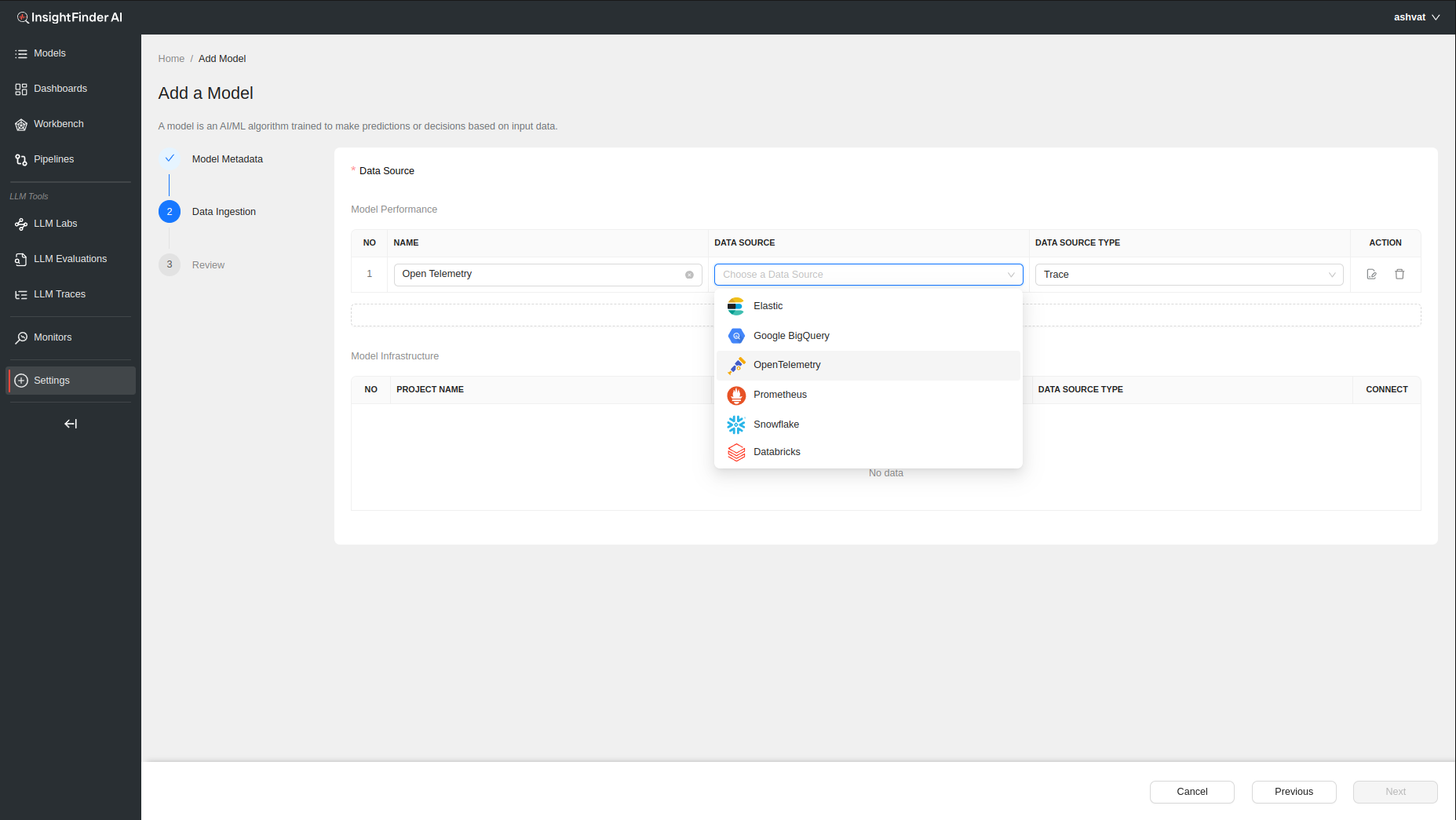

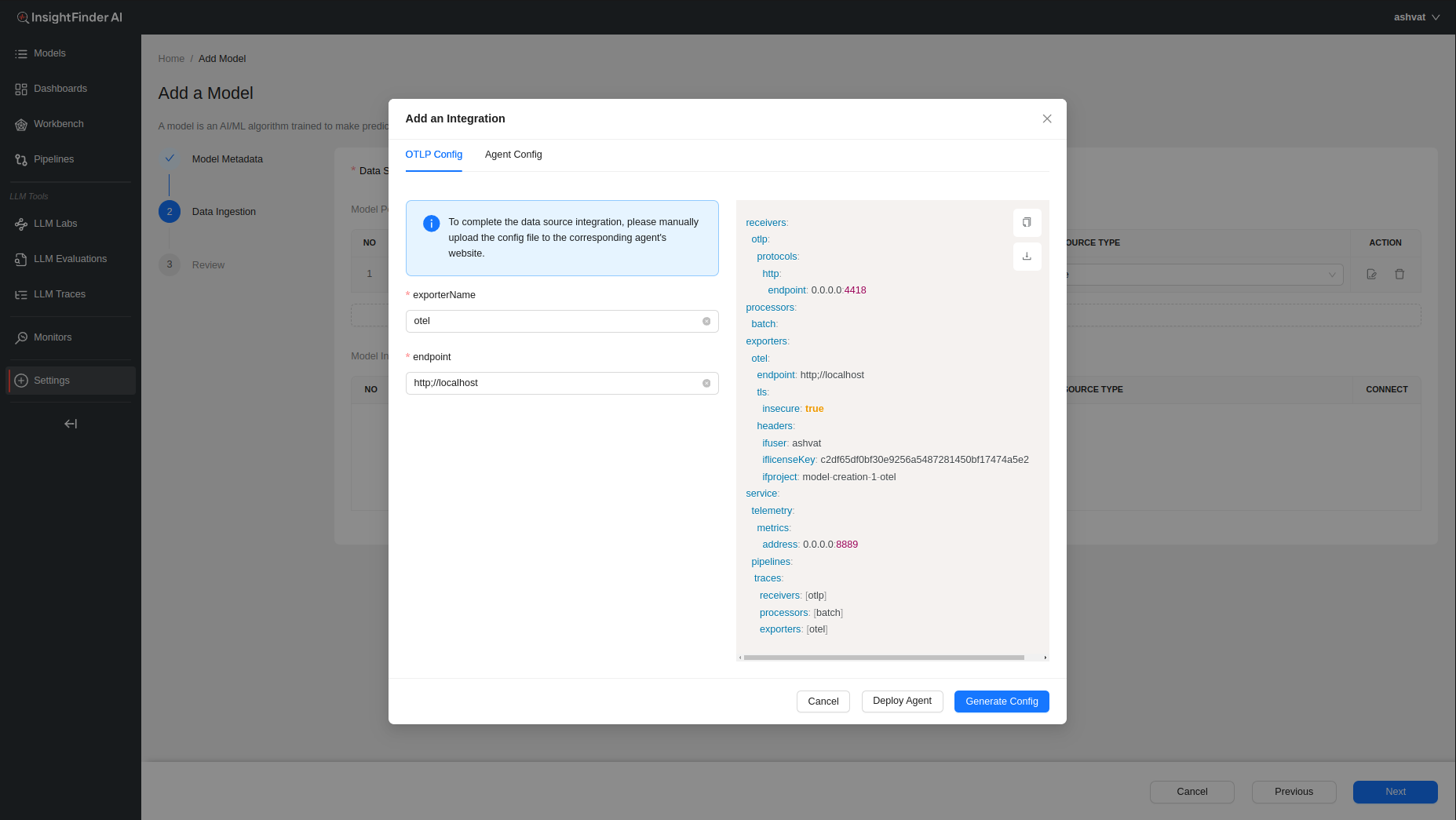

When creating a model in InsightFinder AW, you need to select ‘Trace’ as at least one of its data types in order to ingest traces and run Trace Analysis on the ingested data.

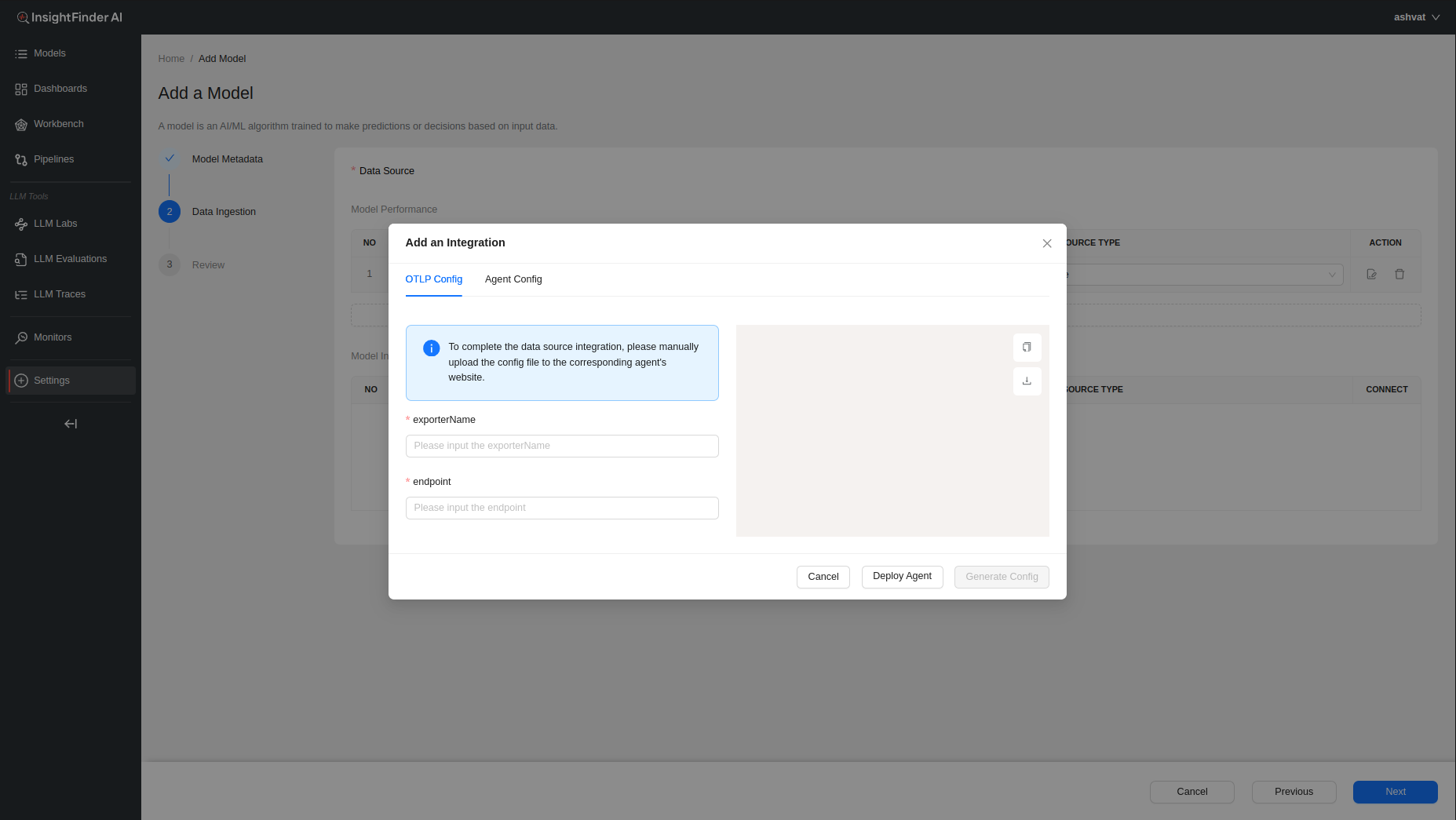

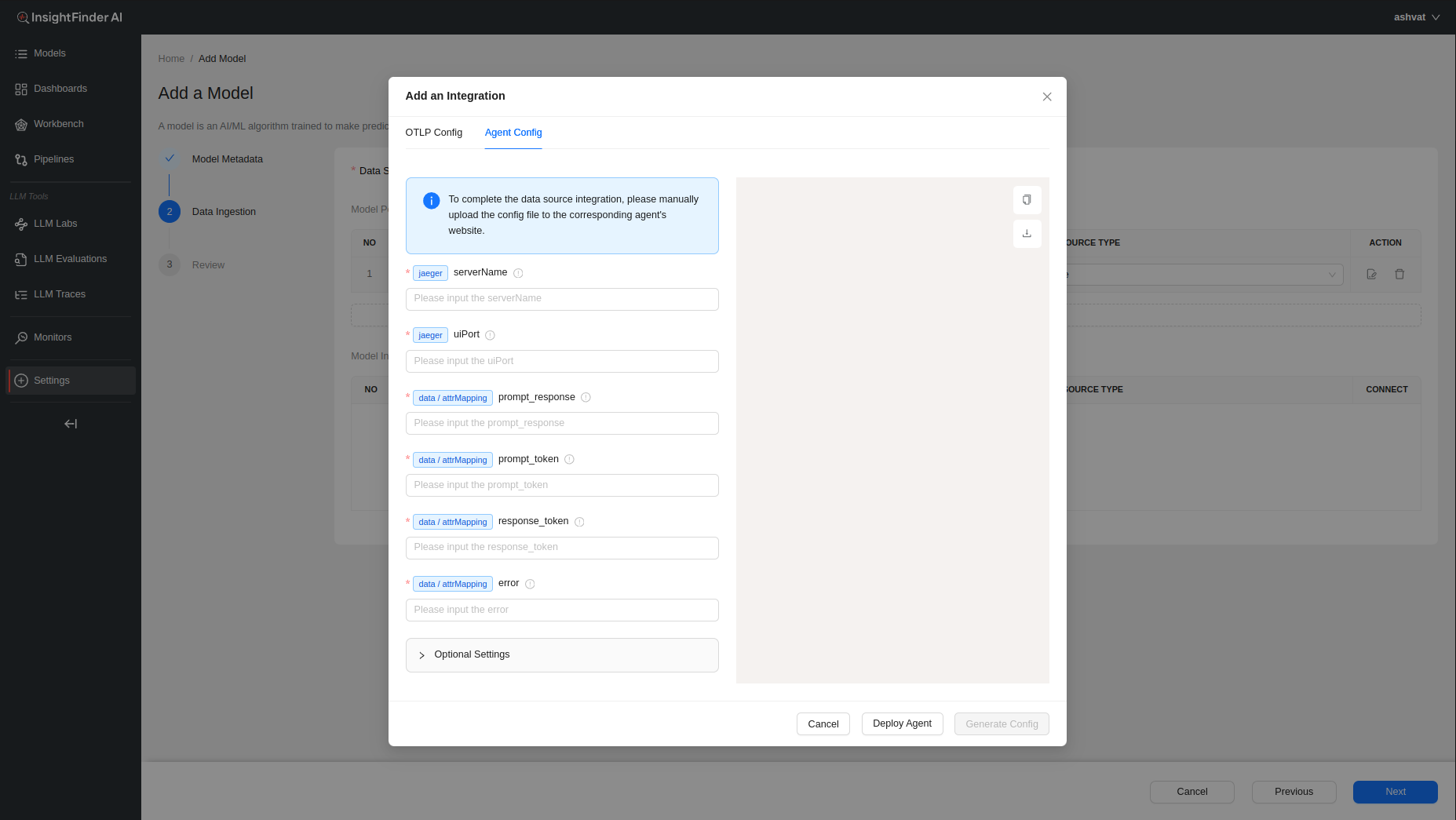

After you choose the data source (OpenTelemetry in this example), a pop-up window lets you enter the endpoint and credential information needed to configure InsightFinder’s trace collector(s), along with any additional configuration that might be required.

To learn more about configuring the collector agents, click the ‘Deploy Agent’ button at the bottom; it redirects you to the README documentation for the specific data collector.

Once the required fields are filled in, the ‘Generate Config’ button becomes clickable. Click it to have InsightFinder generate the configuration required to set up streaming.

For more information on model creation, refer to the <Model-Creation-document-link>.

Iftracer-sdk

The iftracer-sdk is InsightFinder’s Python SDK built on top of OpenTelemetry that lets developers monitor and debug Large Language Model (LLM) executions with minimal intrusion. It enables distributed tracing of prompt calls, LLM responses, vector database interactions, and more, while integrating smoothly with your existing observability stack (e.g., Datadog or Honeycomb).

Setup and Quick Start

- Install the SDK (via PyPI):

- Initialize the SDK in your code, typically at your application’s entry point (e.g., __init__.py):

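```python
from iftracer.sdk import Iftracer

# Initialize once, at application startup.
Iftracer.init(
    api_endpoint="https://otlp.insightfinder.com",
    iftracer_user="YOUR_USER",                # your InsightFinder user name
    iftracer_license_key="YOUR_LICENSE_KEY",  # your InsightFinder license key
    iftracer_project="YOUR_TRACE_PROJECT",    # trace project that receives the spans
)
```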

- Instrument functions using decorators to capture tracing spans automatically:

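```python
from iftracer.sdk.decorators import task, workflow


@workflow(name="my_workflow")
def my_workflow(*args, **kwargs):
    # High-level orchestration: call tasks and combine their results.
    ...


@task(name="my_task")
def my_task(*args, **kwargs):
    # A single unit of work inside the workflow.
    ...
```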

- Choosing Between Iftracer Decorators

- Use @aworkflow or @atask to decorate an asynchronous function, and @workflow or @task to decorate a synchronous function.

- Use @aworkflow or @workflow when the function calls multiple tasks or workflows and combines their results, when it is a high-level orchestration of a process, when you need more tags captured, or when you want to create a logical boundary for a workflow execution. Otherwise, use @atask or @task.
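Decorators like these capture spans by wrapping the decorated function. The sketch below is a toy, standard-library-only illustration of that idea, not iftracer’s implementation (`toy_task`, `toy_atask`, and `RECORDED_SPANS` are hypothetical names). It also shows why asynchronous functions need their own decorator: calling an `async def` function only creates a coroutine, so a synchronous wrapper would time the coroutine’s creation rather than the awaited work.

```python
import asyncio
import functools
import time

# Hypothetical in-memory store for recorded spans (illustration only).
RECORDED_SPANS: list[dict] = []


def toy_task(name):
    """Toy sync decorator: times one call and records it as a span."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            finally:
                RECORDED_SPANS.append(
                    {"name": name, "duration_s": time.perf_counter() - start}
                )
        return wrapper
    return decorator


def toy_atask(name):
    """Toy async decorator: awaits the coroutine so timing covers the real work."""
    def decorator(fn):
        @functools.wraps(fn)
        async def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return await fn(*args, **kwargs)
            finally:
                RECORDED_SPANS.append(
                    {"name": name, "duration_s": time.perf_counter() - start}
                )
        return wrapper
    return decorator


@toy_task(name="embed_query")
def embed_query(text):
    return [float(len(text))]


@toy_atask(name="call_llm")
async def call_llm(prompt):
    await asyncio.sleep(0.01)  # stand-in for a network call to a model
    return f"answer to: {prompt}"


embed_query("hello")
print(asyncio.run(call_llm("hi")))         # answer to: hi
print([s["name"] for s in RECORDED_SPANS])  # ['embed_query', 'call_llm']
```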

Once configured, each decorated function generates trace data, including LLM model names, embedding model details, and RAG datasets as tags, enabling full-stack observability and real-time analytics on the InsightFinder platform.

InsightFinder LLM Labs Trace Projects

When using InsightFinder LLM Labs, a dedicated trace project/model is automatically created and populated with your LLM traces. This eliminates the need for manual project setup—every LLM request, response, and intermediate step is automatically ingested into the trace model.

The resulting project in InsightFinder provides:

- End-to-end visibility of prompt execution and downstream dependencies.

- Automatic correlation of LLM spans with context such as datasets, model type, and evaluation results.

- Real-time monitoring of performance, failures, and safety issues.

This means developers can start analyzing and troubleshooting LLM workflows immediately, without extra configuration.

InsightFinder Trace Analysis

LLM Traces Page

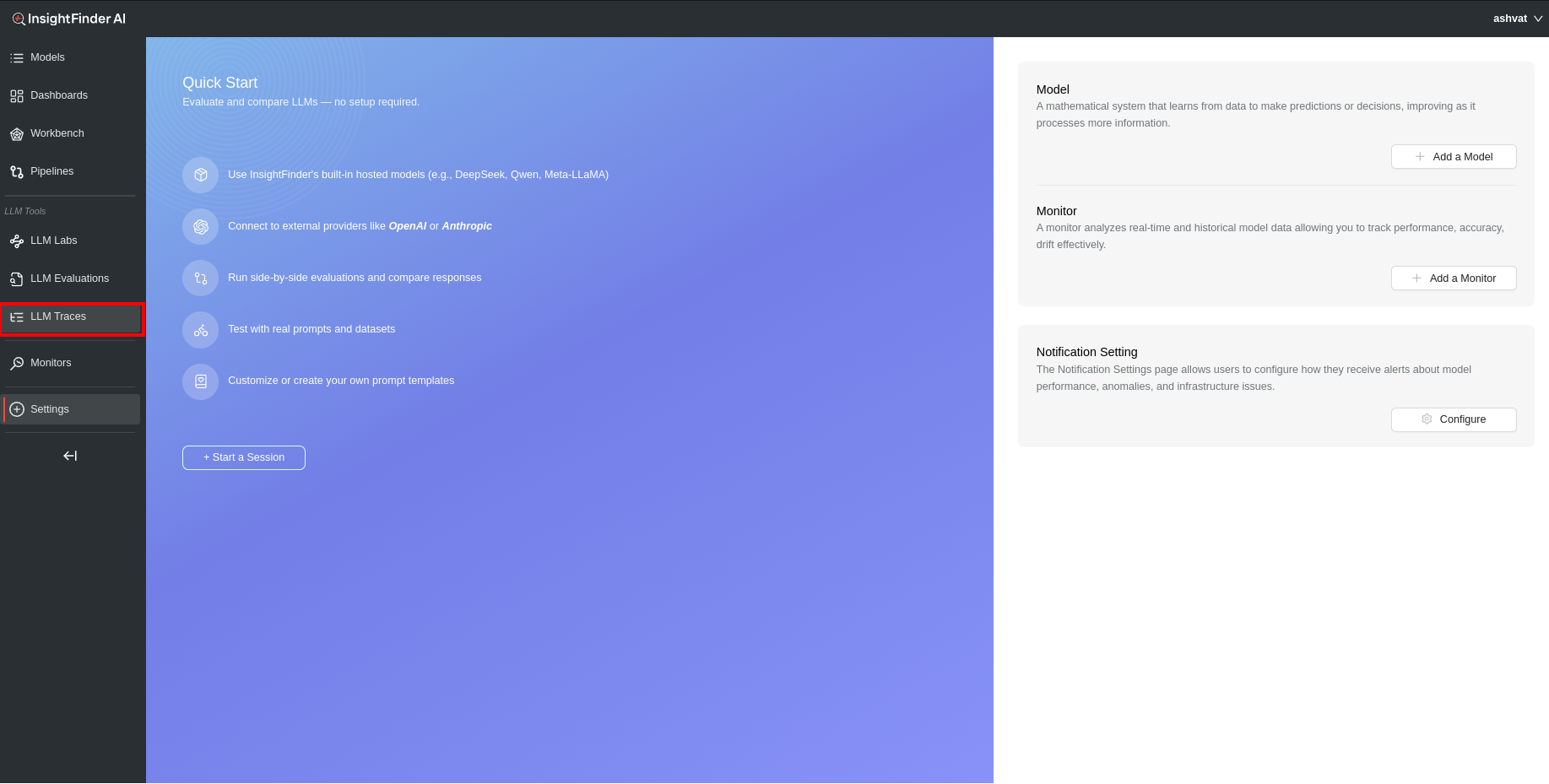

From anywhere in the InsightFinder AW UI, click LLM Traces in the side navigation bar to open the AW LLM Traces Page.

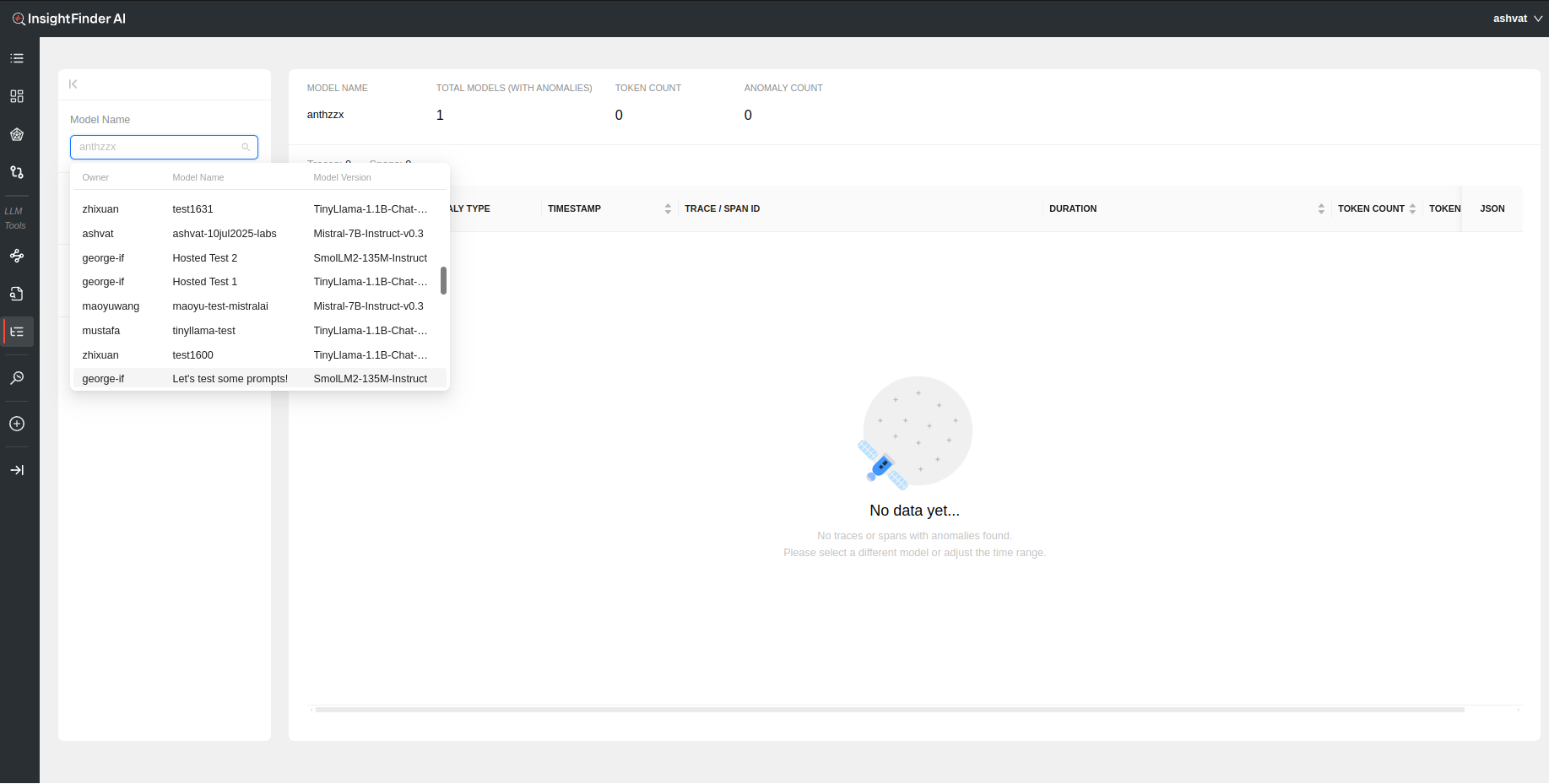

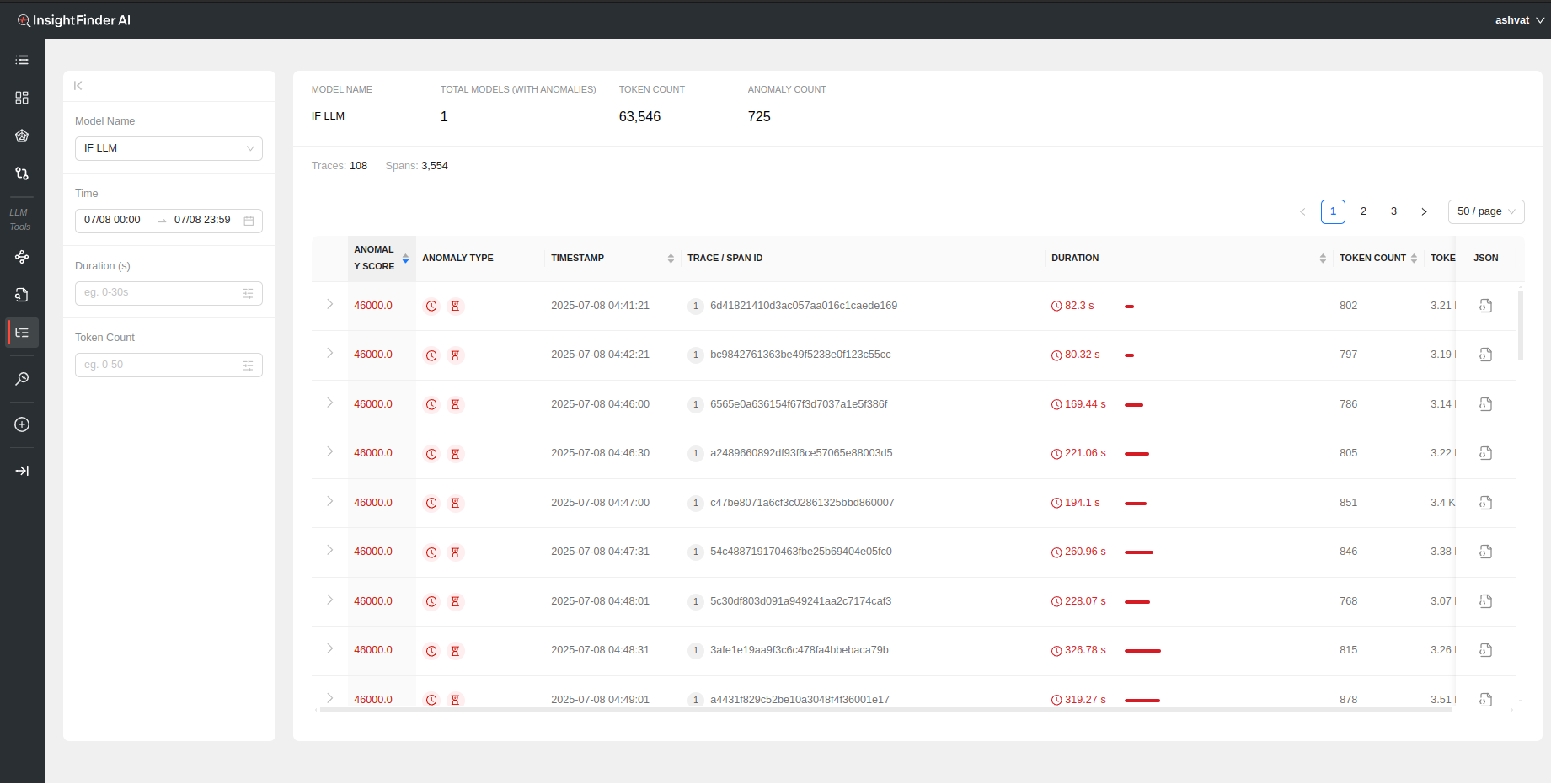

On the AW LLM Traces Page, select the model from the drop-down list (or type its name to narrow the search).

Once the model is selected, all the trace data that was detected as anomalous by InsightFinder WatchTower will be displayed for that model’s traffic.

Filtering

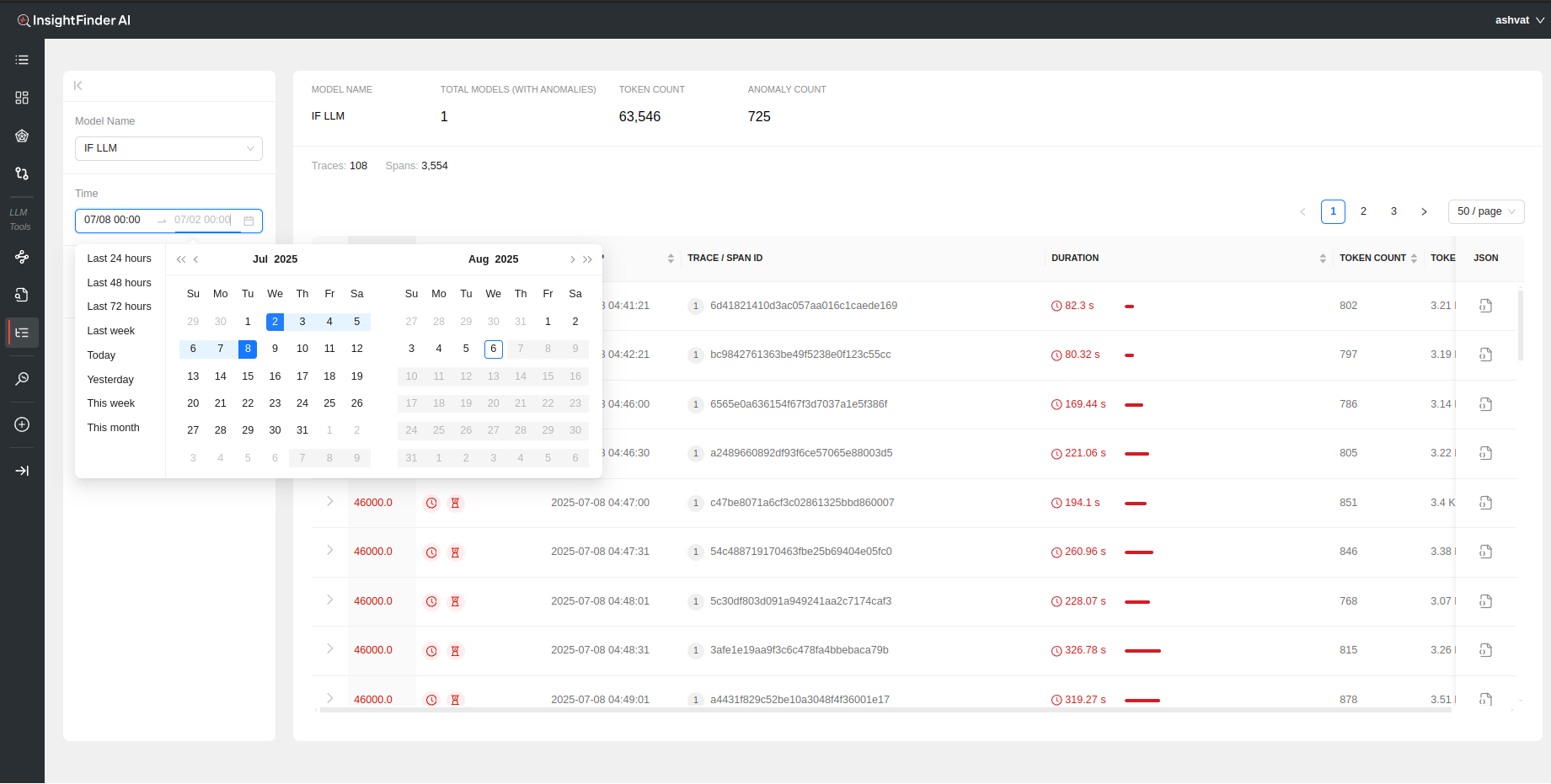

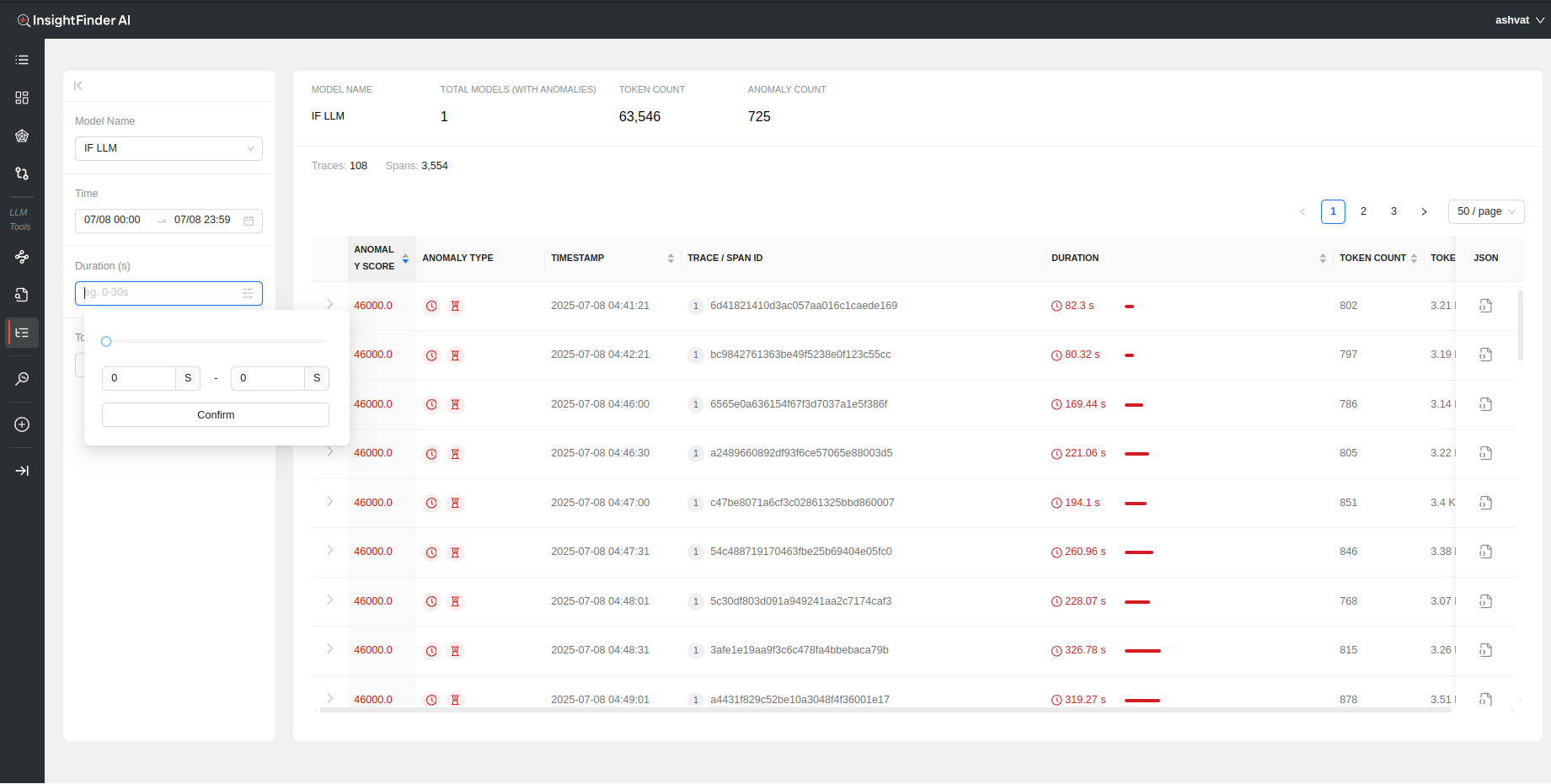

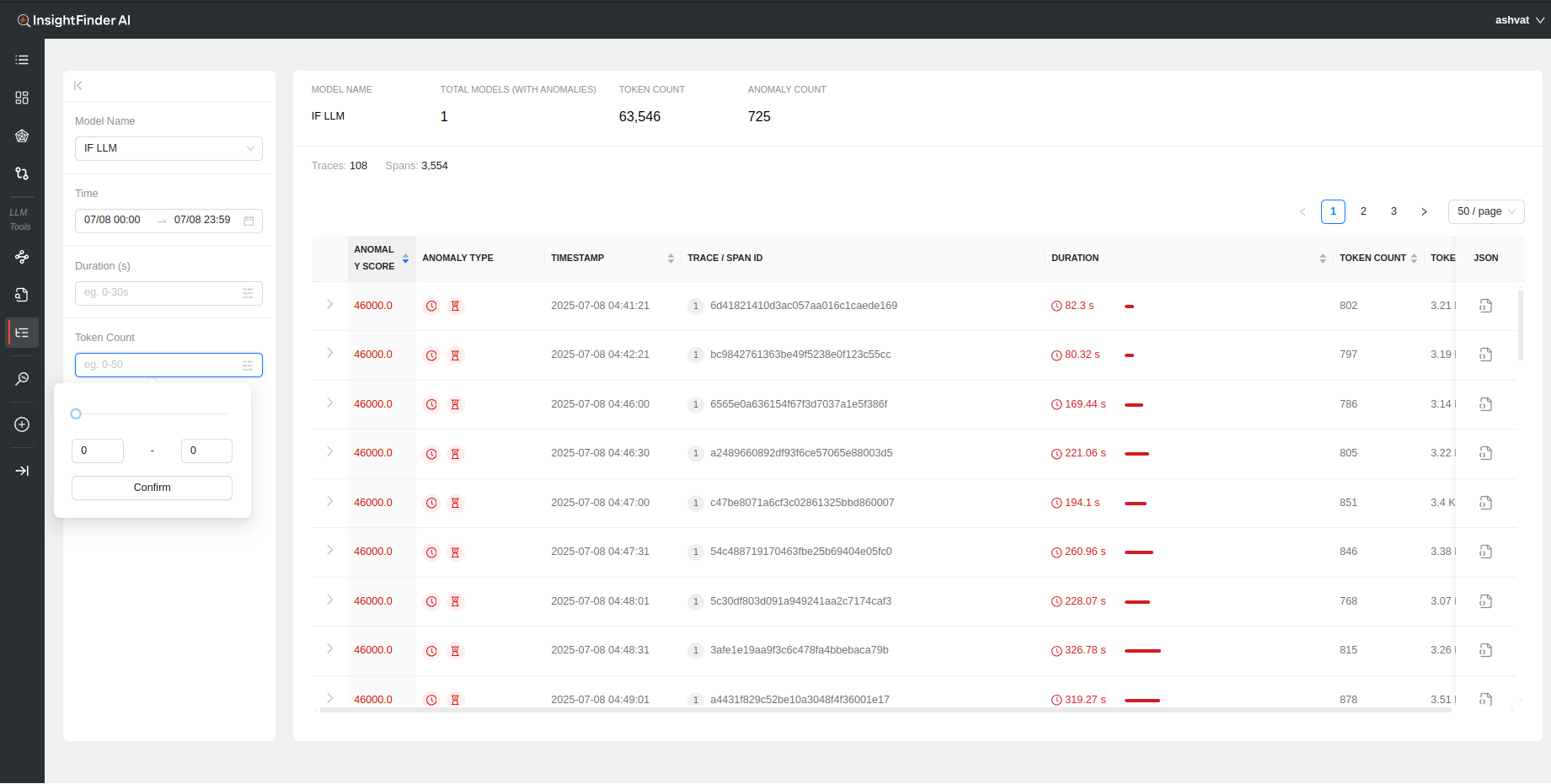

By default, all anomalous traces from the past 24 hours are loaded and displayed. You can narrow the search further with the following filters:

- Time: The date and time range for which to load data. You can also edit the start and end times manually.

- Duration(s): Filter traces by their duration.

- Token Count: Filter by the minimum and maximum token count per trace.
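Assuming the time, duration, and token-count selections combine as a logical AND (each one narrows the results further), the filtering behaves like the sketch below. `TraceRow` and `filter_traces` are hypothetical names, the inclusive bounds are an assumption, and this is not the UI’s actual implementation.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class TraceRow:
    # Hypothetical row shape, for illustration only.
    trace_id: str
    timestamp: datetime
    duration_s: float
    token_count: int


def filter_traces(rows, start, end, min_duration_s=None, max_duration_s=None,
                  min_tokens=None, max_tokens=None):
    """Keep rows inside [start, end] and within the optional duration/token bounds."""
    result = []
    for r in rows:
        if not (start <= r.timestamp <= end):
            continue
        if min_duration_s is not None and r.duration_s < min_duration_s:
            continue
        if max_duration_s is not None and r.duration_s > max_duration_s:
            continue
        if min_tokens is not None and r.token_count < min_tokens:
            continue
        if max_tokens is not None and r.token_count > max_tokens:
            continue
        result.append(r)
    return result


now = datetime(2024, 1, 2, 12, 0)
rows = [
    TraceRow("a", now - timedelta(hours=1), 2.5, 1800),
    TraceRow("b", now - timedelta(hours=30), 9.0, 4000),  # older than 24 hours
    TraceRow("c", now - timedelta(hours=3), 0.4, 150),
]
# Default window (past 24 hours), narrowed to traces lasting at least 1 second.
recent = filter_traces(rows, now - timedelta(hours=24), now, min_duration_s=1.0)
print([r.trace_id for r in recent])  # ['a']
```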

Analyzing the Data

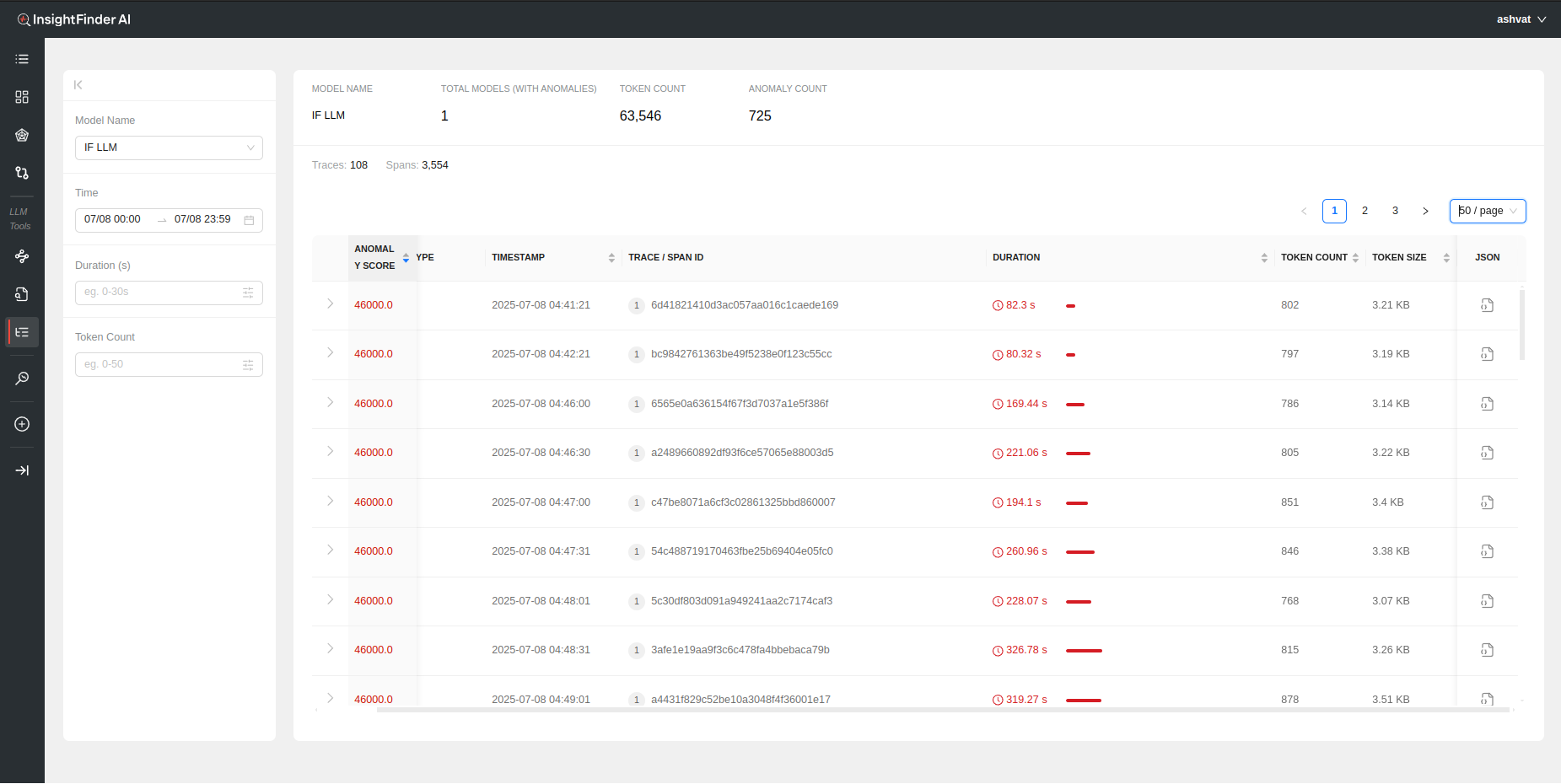

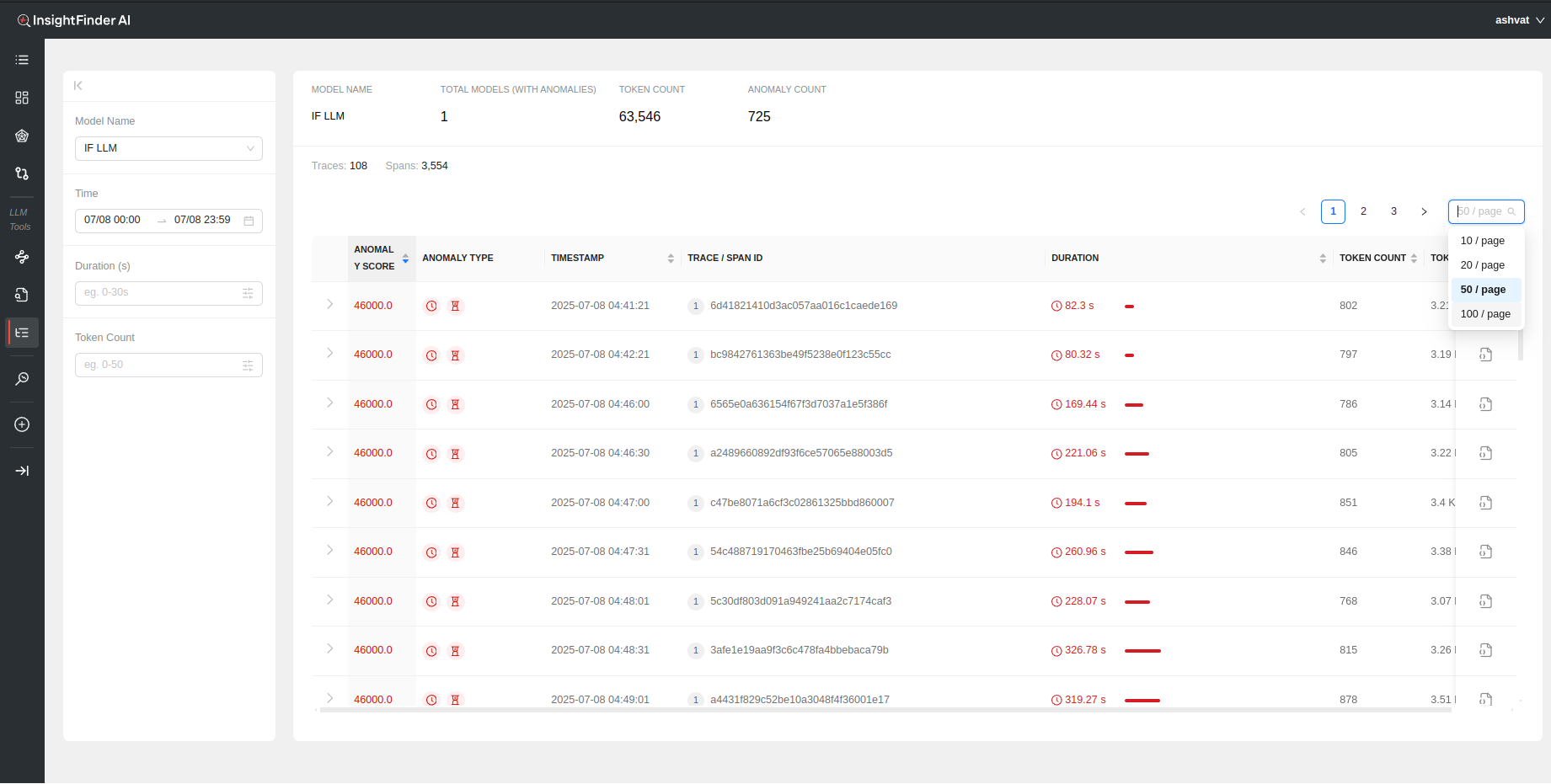

Once the data is loaded, the total token count, total anomaly count, total traces, and total spans are summarized at the top of the page.

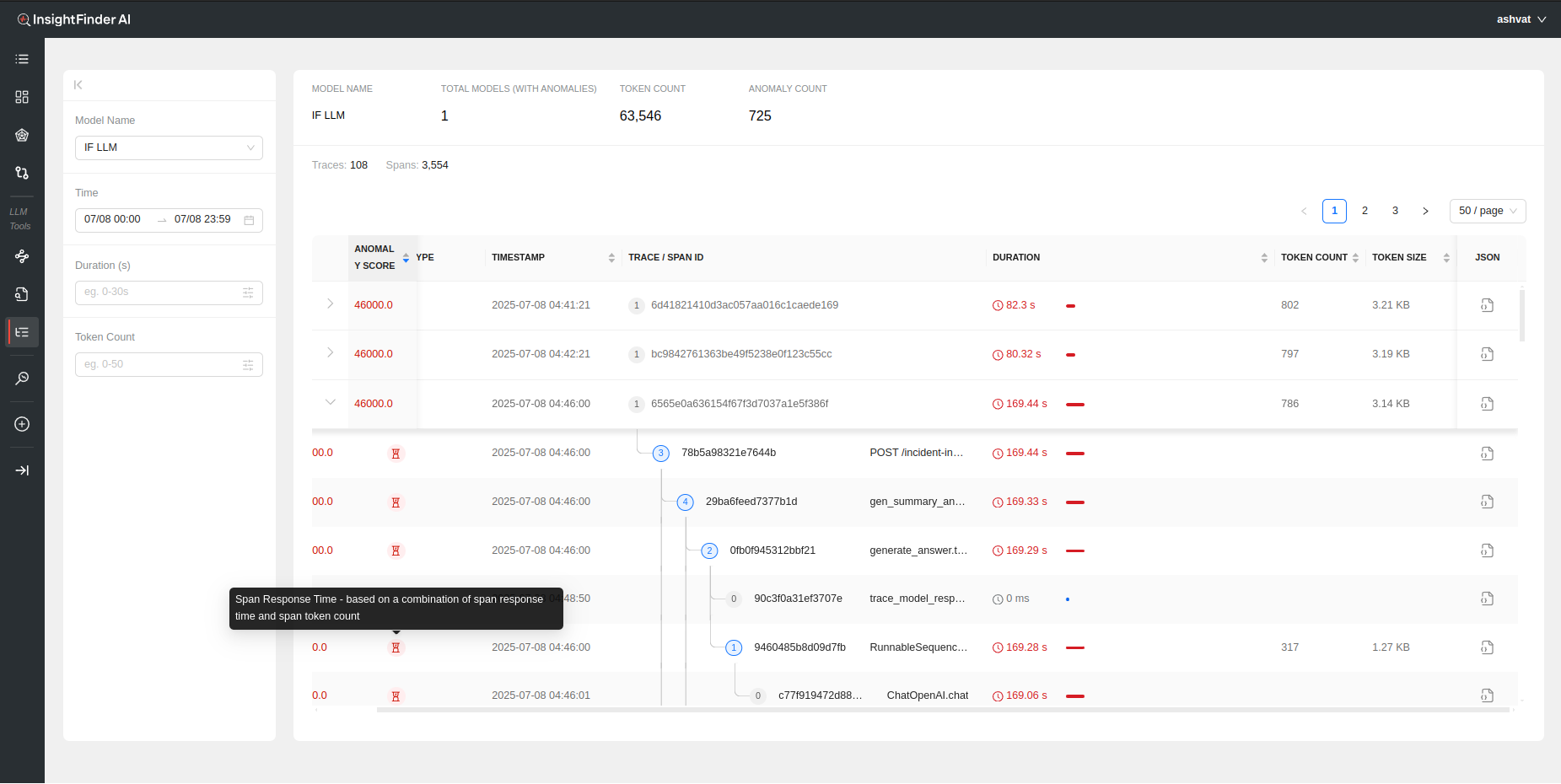

You can analyze each anomalous trace further by examining its individual spans: click the > icon next to the trace to show its spans.

InsightFinder also analyzes each span within the trace, so issues are identified at both the trace level and the span level.

The following information is available to see immediately once the data is loaded:

- Anomaly Score: A measure of how severe the anomaly is, as determined by InsightFinder AW. The higher the score, the more severe the anomaly. Click the column header to sort.

- Anomaly Type: The type of anomaly detected by InsightFinder. There are four major categories of anomalies for trace data (you can also identify the anomaly type by hovering over its icon; see figure 1):

- Trace Response Time: The full trace took longer than normal or exceeded the threshold.

- Span Response Time: One or more spans within the trace took longer than normal or exceeded the threshold.

- Span No Prompt Response: A particular span returned no response.

- Span Errors: One or more spans in the trace have their error flag set to true.

- Timestamp: The timestamp of the anomalous trace and/or span. Click the column header to sort.

- Trace / Span ID: The trace ID and/or span ID of the anomalous trace or span.

- Duration: How long the trace or span lasted. Click the column header to sort.

- Token Count: The total number of tokens used by the model per trace call.

- Token Size: The total size of the tokens for the LLM/ML model during the trace call.

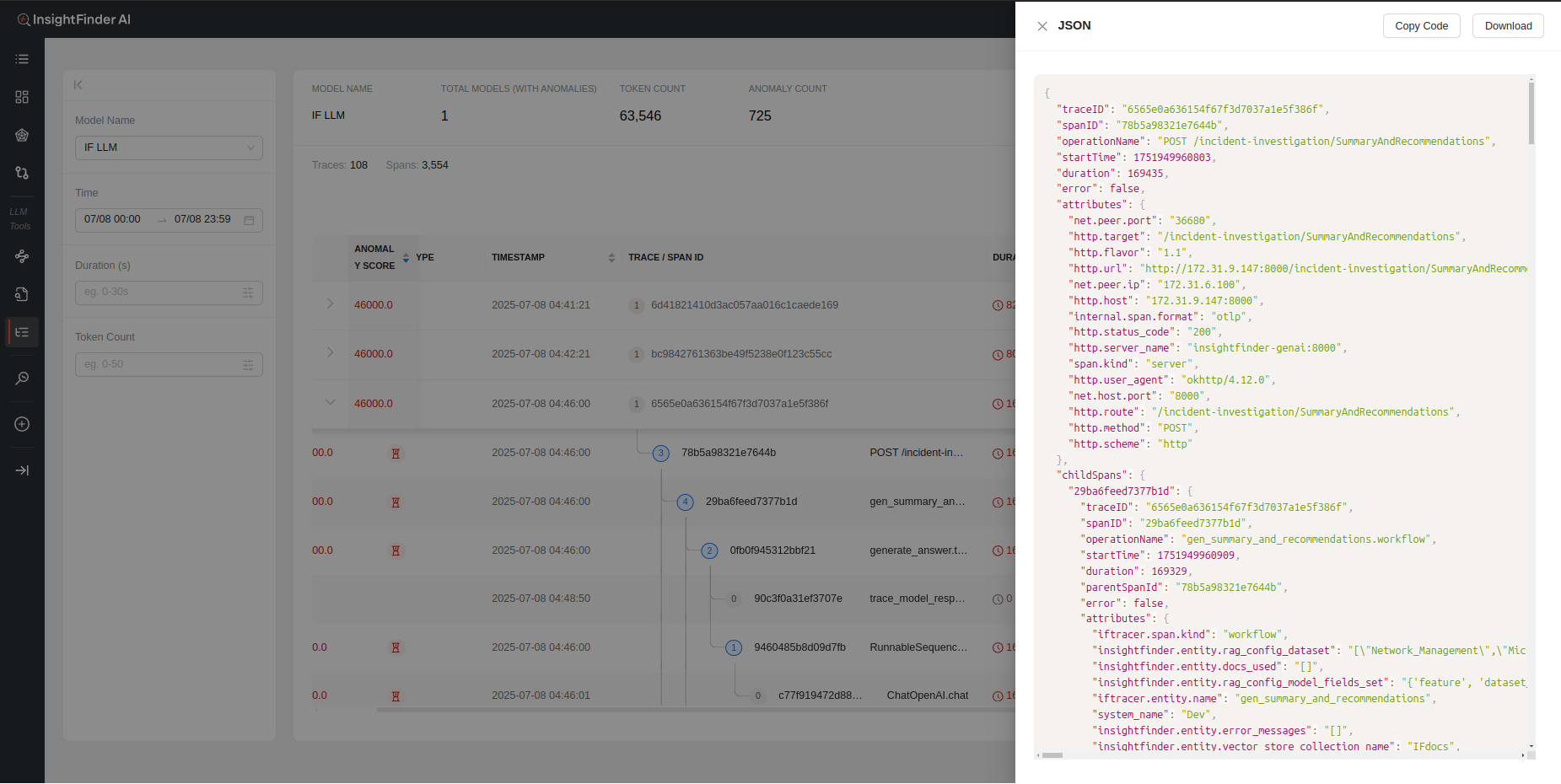

- JSON: The trace log in JSON format.

- Copy Code: Copies the full JSON trace log.

- Download: Downloads the log as a JSON file.
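The four anomaly categories and the sortable Anomaly Score column can be illustrated with a simplified sketch. It flags categories using fixed thresholds only, whereas InsightFinder also compares against learned normal behavior (“longer than normal”), so treat it as an approximation of what each category means, not of how WatchTower detects or scores anomalies. `SpanRecord`, `TraceRecord`, `anomaly_types`, and the threshold values are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class SpanRecord:
    span_id: str
    duration_ms: float
    has_response: bool = True
    error: bool = False


@dataclass
class TraceRecord:
    trace_id: str
    anomaly_score: float
    duration_ms: float
    spans: list[SpanRecord] = field(default_factory=list)


def anomaly_types(trace, trace_threshold_ms, span_threshold_ms):
    """Return the categories a fixed-threshold rule would flag for this trace."""
    types = []
    if trace.duration_ms > trace_threshold_ms:
        types.append("Trace Response Time")
    if any(s.duration_ms > span_threshold_ms for s in trace.spans):
        types.append("Span Response Time")
    if any(not s.has_response for s in trace.spans):
        types.append("Span No Prompt Response")
    if any(s.error for s in trace.spans):
        types.append("Span Errors")
    return types


traces = [
    TraceRecord("t1", 0.42, 900, [SpanRecord("s1", 300), SpanRecord("s2", 600)]),
    TraceRecord("t2", 0.91, 5200, [SpanRecord("s3", 4800, error=True),
                                   SpanRecord("s4", 400, has_response=False)]),
]

# Like clicking the Anomaly Score header: highest (most severe) first.
for t in sorted(traces, key=lambda t: t.anomaly_score, reverse=True):
    print(t.trace_id, t.anomaly_score, anomaly_types(t, 3000, 2000))
```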

Sensitive Data Filtering in TraceServer

InsightFinder’s TraceServer includes built-in sensitive data filtering to help protect privacy and comply with legal requirements. When enabled, the sensitive data filter automatically detects and masks sensitive information—such as social security numbers (SSNs), addresses, and other personal identifiers—within trace data before it is displayed or processed.

How Sensitive Data Filtering Works

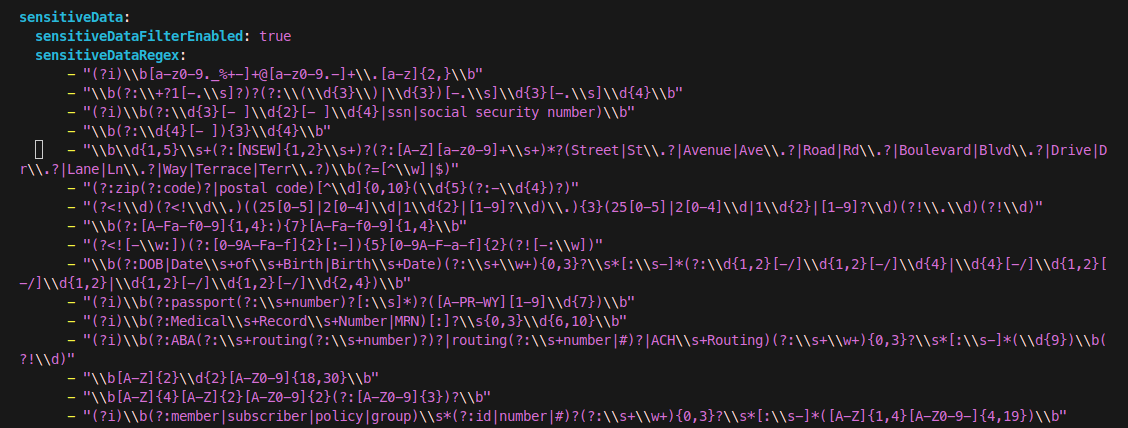

Configuration: Sensitive data filtering is enabled by setting sensitiveDataFilterEnabled: true in your TraceServer configuration file. You can customize the types of sensitive data detected by defining regular expressions under sensitiveDataRegex. (If you use the SaaS production environment, the InsightFinder team handles this configuration.)

Detection: The filter scans incoming trace data for patterns matching sensitive information (e.g., SSNs, addresses, medical IDs).

Masking: When sensitive data is detected, it is masked or removed from the trace output, ensuring that such information is not exposed in the UI or logs.
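The detect-and-mask steps amount to regular-expression substitution over trace text. The sketch below is a hypothetical illustration of that idea, not TraceServer’s code: `SENSITIVE_PATTERNS` stands in for whatever you configure under sensitiveDataRegex, the `enabled` flag mirrors sensitiveDataFilterEnabled, and the `[MASKED_...]` placeholder format is an assumption.

```python
import re

# Hypothetical patterns keyed by label; a real deployment defines its own
# patterns under sensitiveDataRegex.
SENSITIVE_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def mask_sensitive(text, enabled=True, patterns=SENSITIVE_PATTERNS):
    """Replace every match of each pattern with a [MASKED_<LABEL>] placeholder."""
    if not enabled:
        # Filter switched off: pass the text through unchanged.
        return text
    for label, pattern in patterns.items():
        text = pattern.sub(f"[MASKED_{label}]", text)
    return text


span_input = "My SSN is 123-45-6789, email me at jane.doe@example.com"
print(mask_sensitive(span_input))
# My SSN is [MASKED_SSN], email me at [MASKED_EMAIL]
```

The SSN pattern here assumes the standard 3-2-4 digit grouping (for example, 123-45-6789); values grouped differently need a pattern of their own.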

Example: SSN Filtering

Below are two screenshots demonstrating the effect of sensitive data filtering:

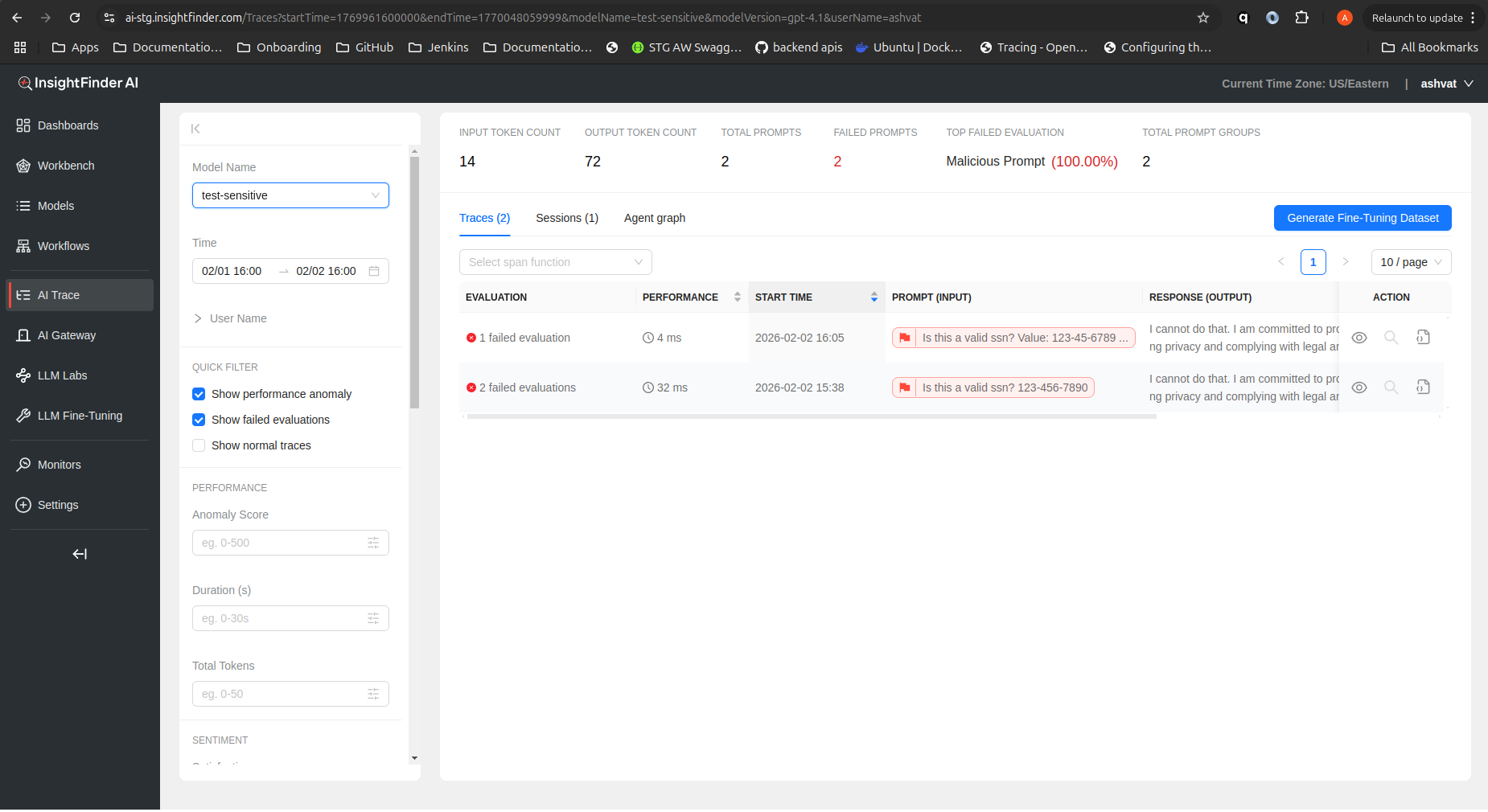

Without Sensitive Data Filter (SSN visible):

In this example, the trace input contains an SSN (123-456-7890). Without filtering, the SSN is visible in the trace details.

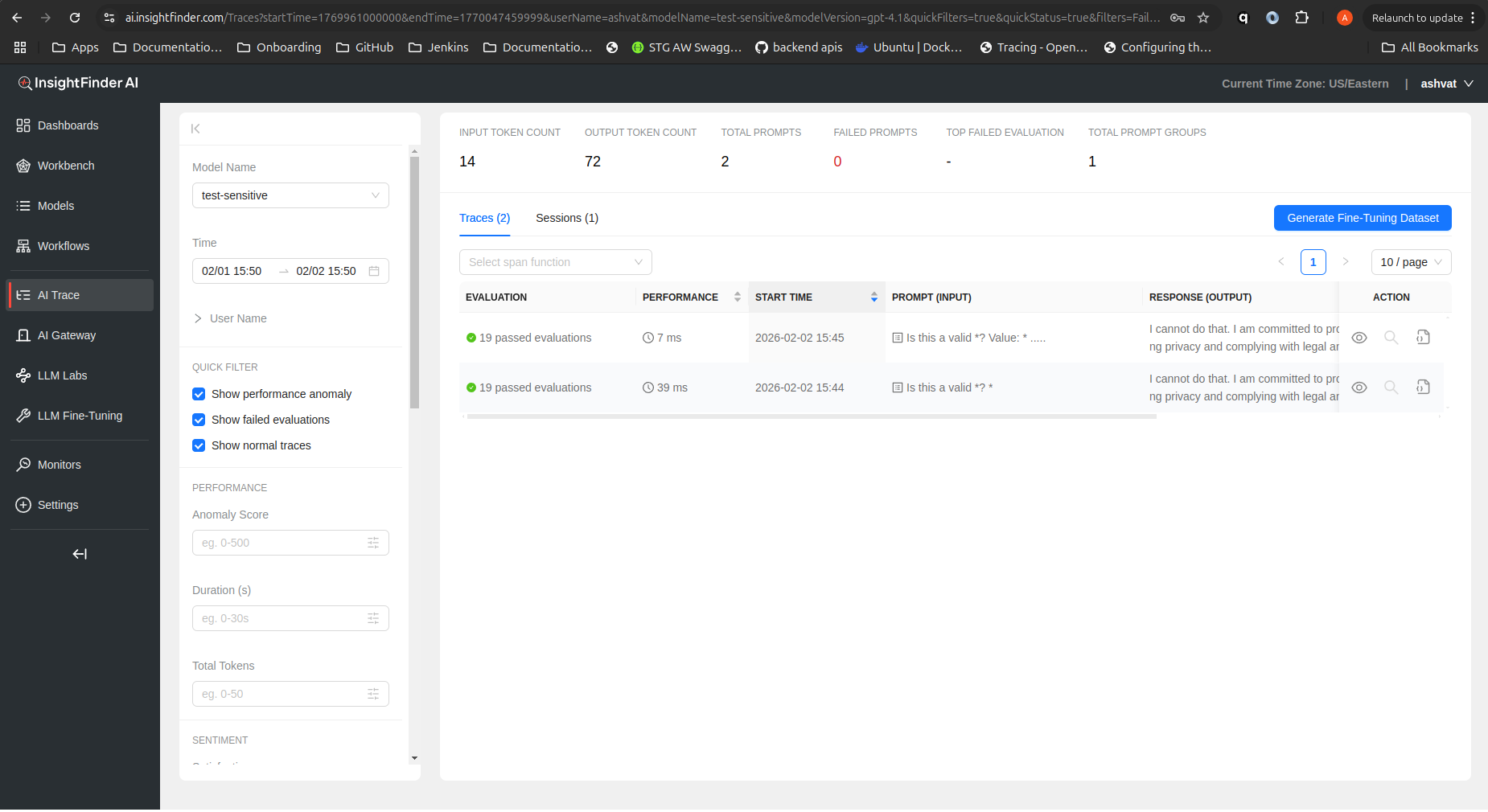

With Sensitive Data Filter Enabled (SSN masked):

Here, the sensitive data filter is enabled. The SSN in the trace input is detected and masked, and the response indicates that sensitive data handling policies are enforced.

Benefits

Compliance: Helps meet privacy regulations (e.g., GDPR, HIPAA).

Security: Prevents accidental exposure of sensitive information.

Customizable: Easily extend filtering to new data types via regex patterns.
